Welcome to Getting Started: Core Concepts. In this video, we’re going to explain all of the core concepts of the Kibsi platform. Understanding these core concepts will make it easier for you to build your own computer vision applications and get the most out of using Kibsi. Watch our video below to learn more.

Video transcription

Hi, I’m Eric from Kibsi, and welcome to our Getting Started series.

In this video, we’re going to explain all of the core concepts of the Kibsi platform.

Kibsi Data Models

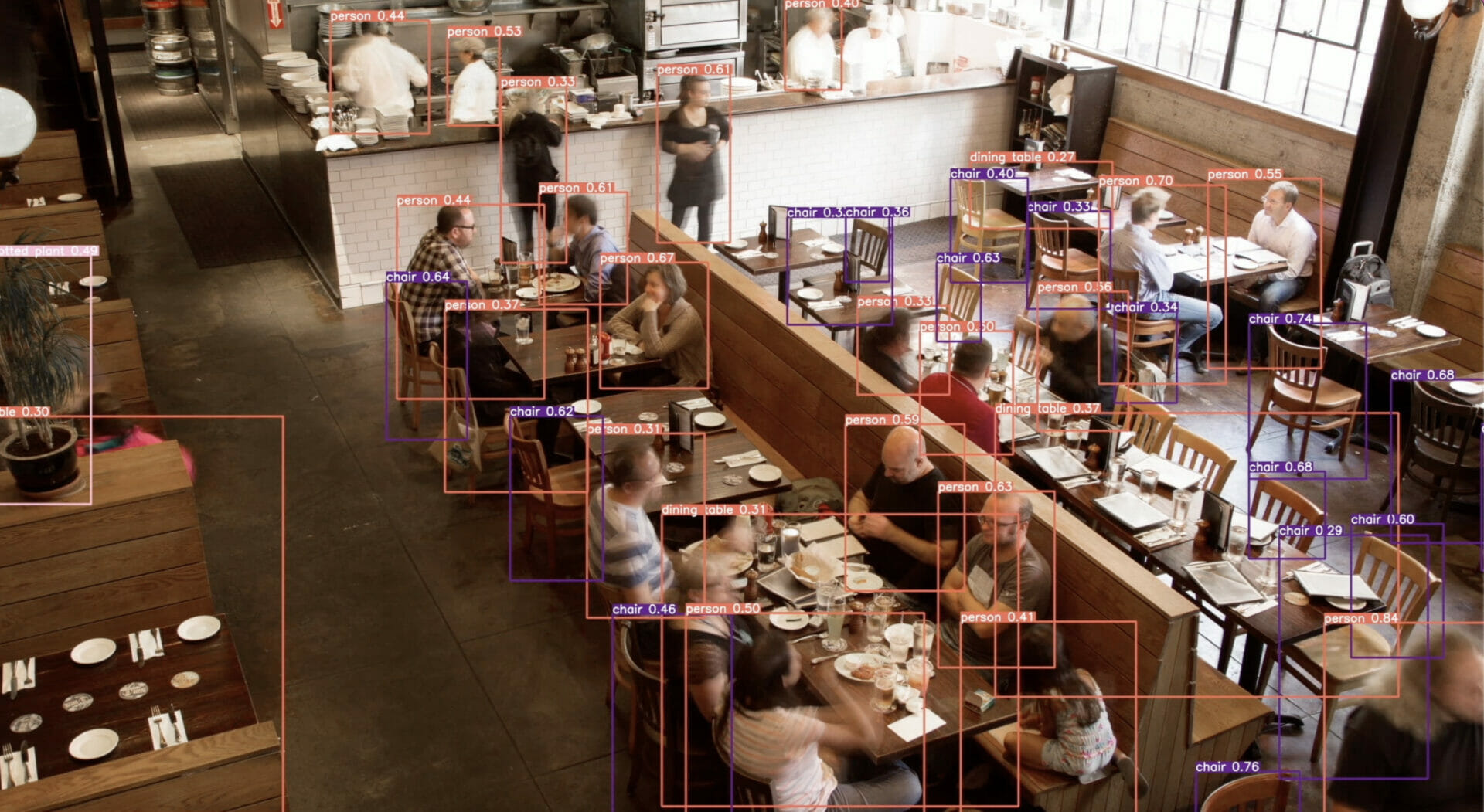

The Kibsi data model is the most important concept to consider when building a computer vision application with Kibsi. To illustrate what the data model is, let’s first talk about what it is not. You’ve probably seen computer vision applications that look a lot like this: bounding boxes drawn around people, chairs, and tables in a restaurant; around airplanes and service trucks at an airport gate; or around all kinds of objects in a warehouse.

What these applications have in common is a focus on individual detections, and that focus causes them to miss the important context around those detections.

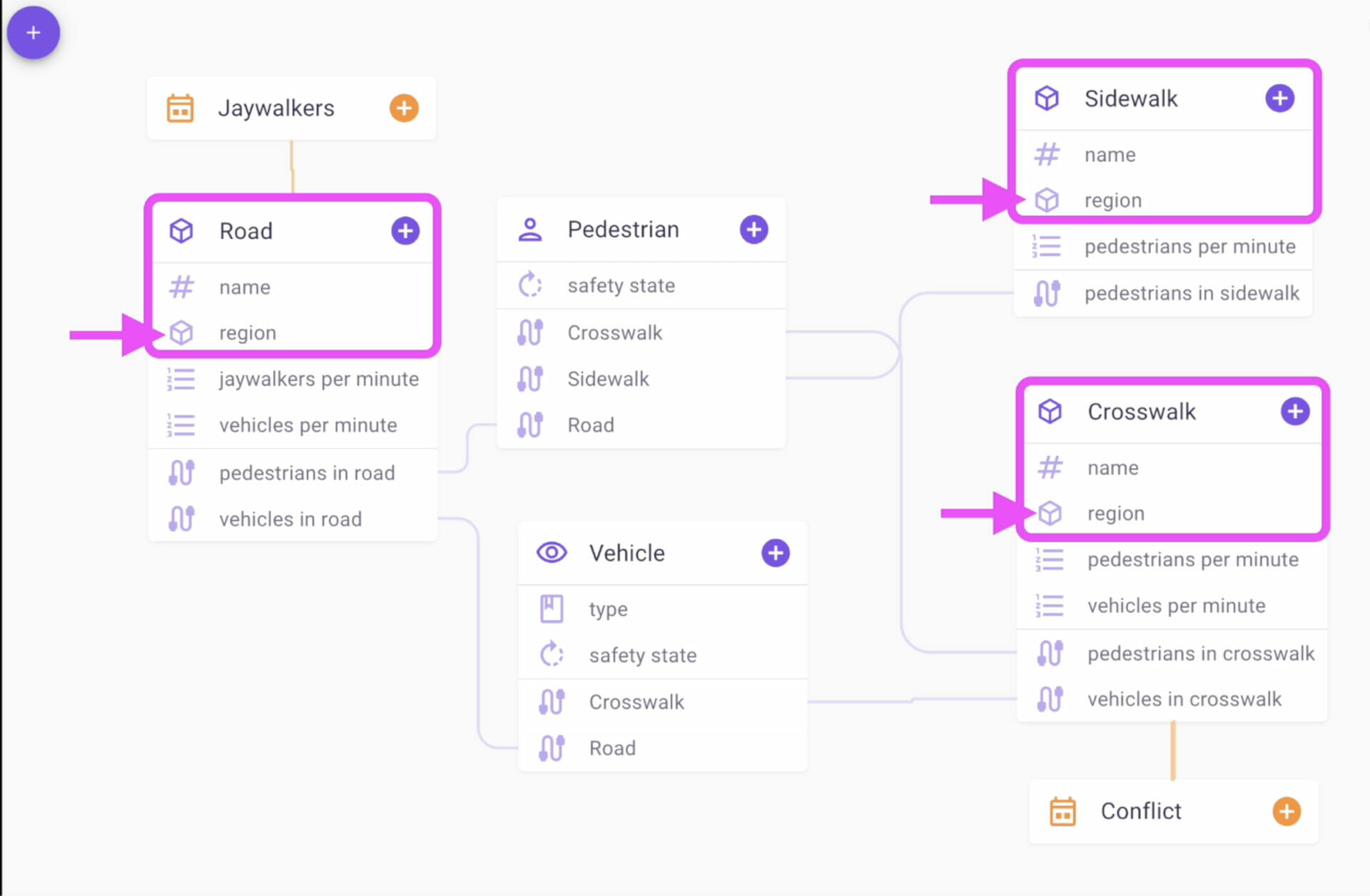

Now, let’s look at each of these scenes again, but this time through the lens of the Kibsi data model. In our restaurant scene, Kibsi can still make all the same detections, but it can also add meaningful context: it can give our tables numbers, group them into stations, and determine the state of each table (whether it’s seated, empty, or ready to order). Kibsi can then reason on top of that data to answer questions like “How long did our customers wait to be seated or to place their order?” or “How can we be notified whenever it takes too long for a table to receive their food?”

In our airport scene, Kibsi will again detect airplanes and trucks on the tarmac, but we also need to correlate data like tail numbers, flight numbers, and gate numbers, and determine when the fuel truck started and finished fueling the plane, when the catering truck arrived and started loading, or when the baggage trucks finished loading. Once we have that data, we can answer questions like “What ground operations are causing the most departure delays?” or “How can we improve the turn times of our aircraft at the gate?”

Back in our warehouse scene, Kibsi can still detect forklifts and operators, but what if we want to solve a real problem like route optimization? We can capture a rich set of data, such as which forklifts are carrying which items, where they are headed, and which aisles are blocked. Once we have that data, we can determine the optimal routes for forklift operators to take to maximize the efficiency of each pickup, route, and drop-off.

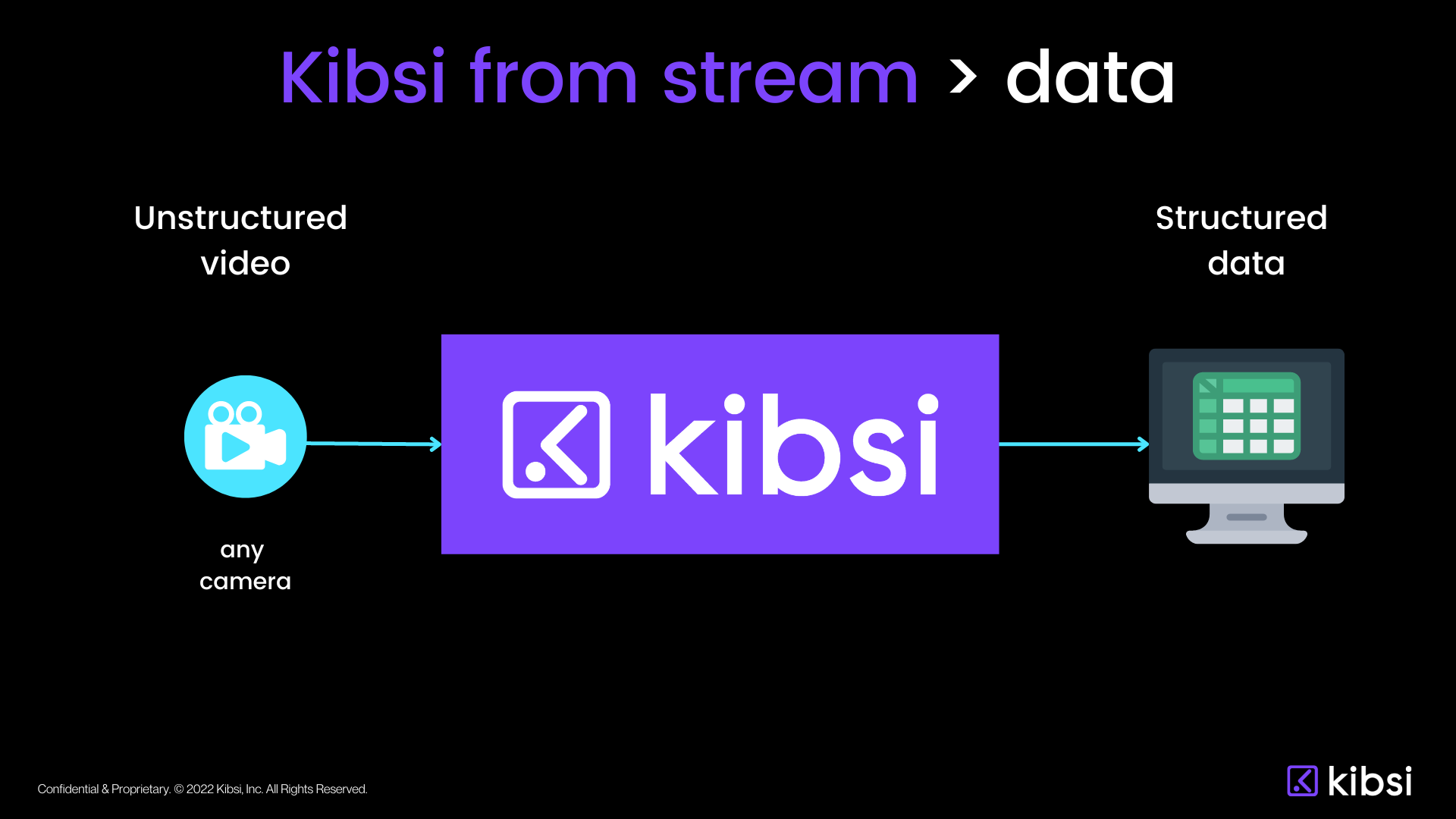

In these three examples, you can see that focusing on individual detections doesn’t tell us the whole story. But when we apply the thinking of the Kibsi data model, we transform our video stream into a real-time, structured data source that contains all the context and data we need to solve our problem.

Kibsi Applications

Remember that the data model is the most important Kibsi concept. A Kibsi application is what we use to define that data model.

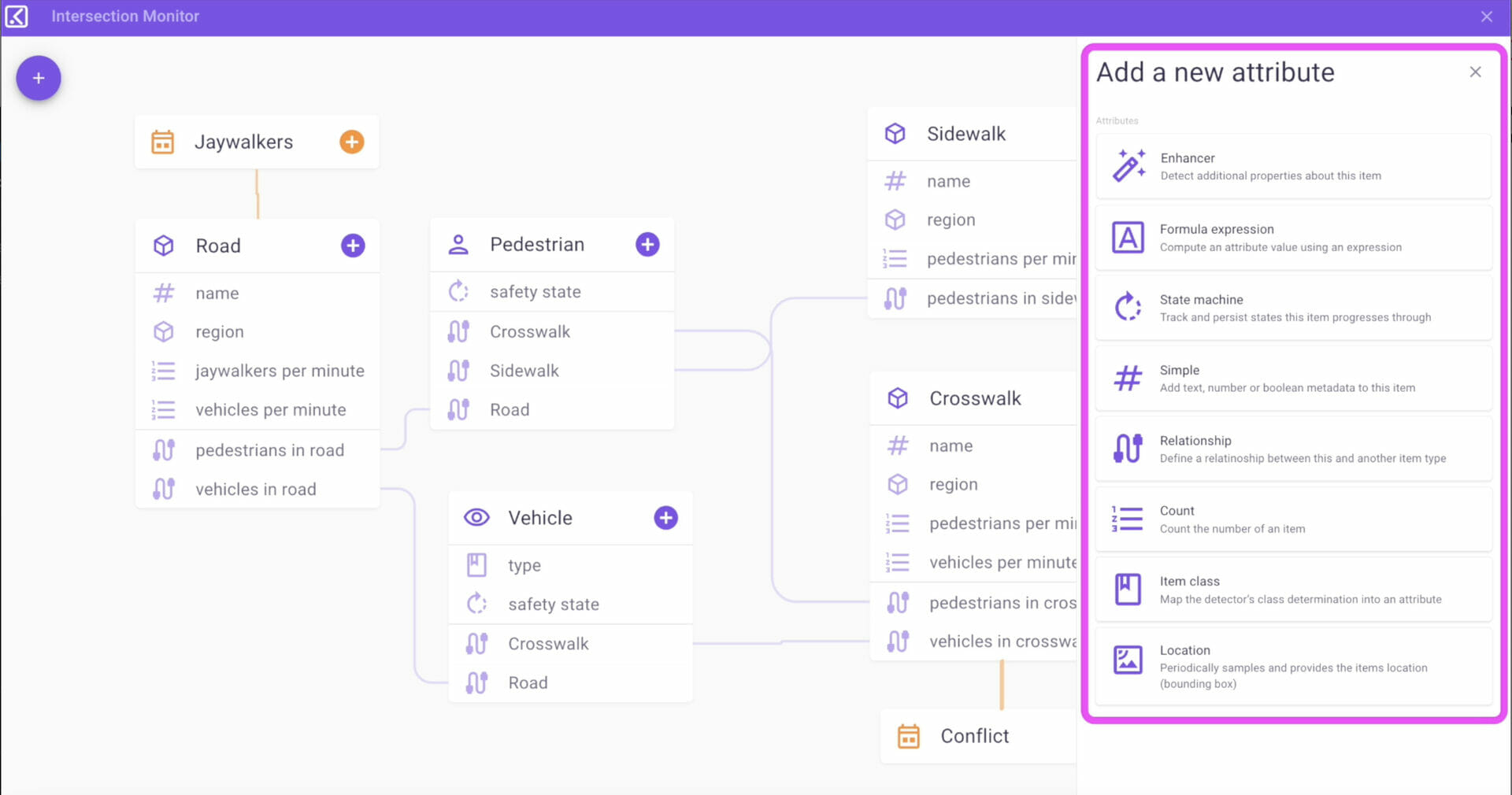

Kibsi applications are built using three types of building blocks:

- Static item types

- Detected item types

- Events

Notice that in our application, we define item types, not individual items. The individual static items and detected items themselves are what we will see in our data stream after we deploy our Kibsi application, and we see them because we defined those item types in our application.
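To make the difference between an item type and an item concrete, here is a minimal Python sketch. The classes `ItemType` and `Item` and the helper `make_item` are hypothetical names for illustration only, not part of the Kibsi API: the type is declared once, and many items of that type can appear in the stream.

```python
from dataclasses import dataclass, field
from itertools import count

# Hypothetical illustration only; these classes are not part of the Kibsi API.
# An item *type* is a definition in the application. An *item* is an instance
# of that type that appears in the data stream after deployment.


@dataclass(frozen=True)
class ItemType:
    name: str
    kind: str  # "static" or "detected"


@dataclass
class Item:
    item_type: ItemType
    item_id: int
    attributes: dict = field(default_factory=dict)


_next_id = count(1)


def make_item(item_type: ItemType, **attributes) -> Item:
    """Create one item instance of the given type with a unique id."""
    return Item(item_type, next(_next_id), dict(attributes))


# Defined once, in the application.
table_type = ItemType("table", "static")
person_type = ItemType("person", "detected")

# Observed many times, in the data stream.
stream = [
    make_item(table_type, number=1),
    make_item(table_type, number=2),
    make_item(person_type),
]
print([(item.item_type.name, item.item_id) for item in stream])
# → [('table', 1), ('table', 2), ('person', 3)]
```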

Let’s go through each of the three building blocks.

Static item types

Static item types are for data points that will always exist in the stream. They will usually, but not always, include one or more region attributes used to associate a name with a physical region that’s visible in the stream. Now, you can create whatever static item types you need to build your data model, but some examples are things like tables, parking spots, conveyor belts, and doors, things that don’t need to be detected because they always exist in the stream.

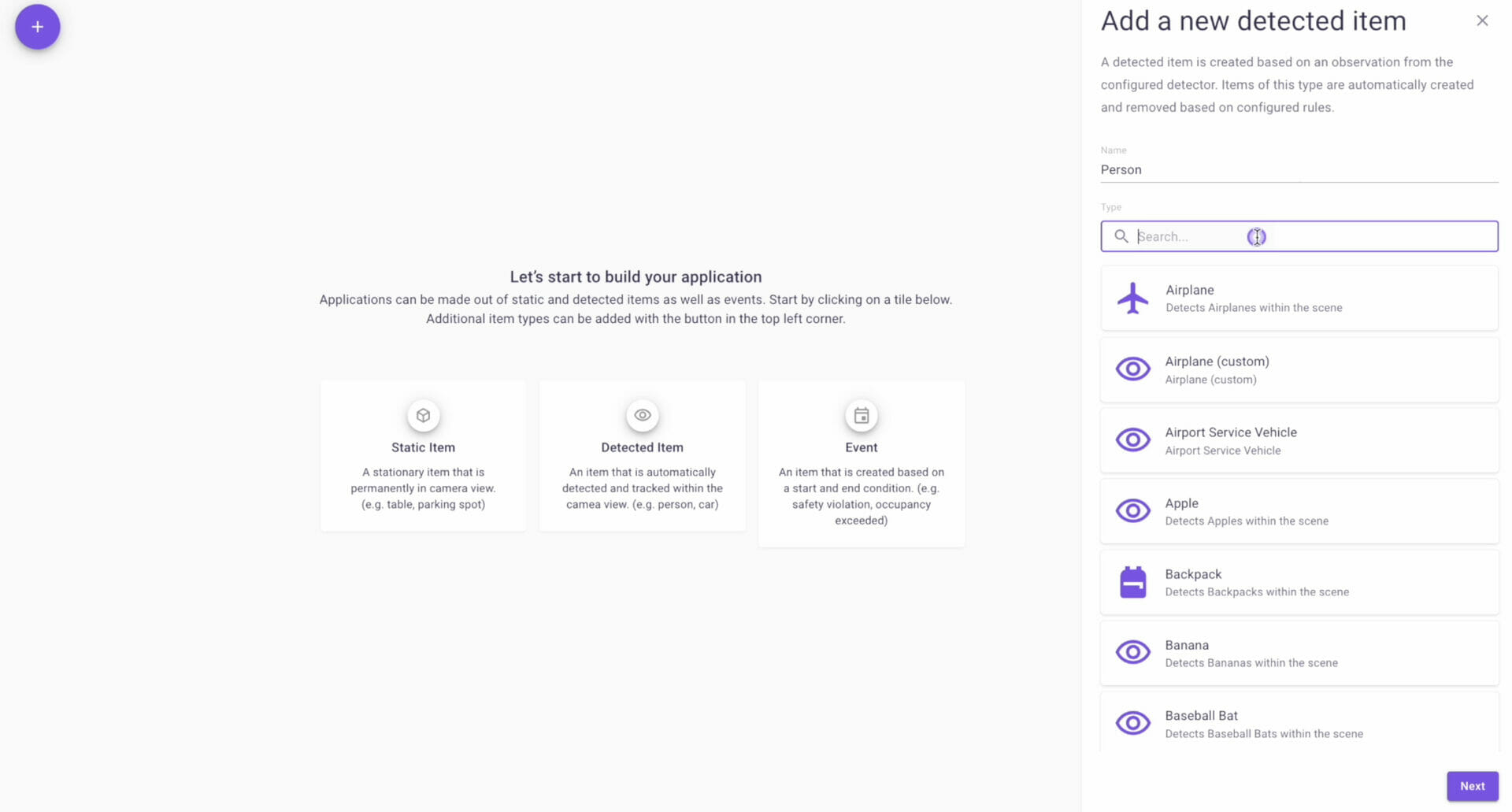

Detected item types

Detected item types are for things that we want to detect dynamically using a machine learning computer vision model that’s been trained to detect a specific class of object. Kibsi has an extensive library of pre-trained models built right into the platform for things like people, animals, many different kinds of vehicles, and a variety of common objects.

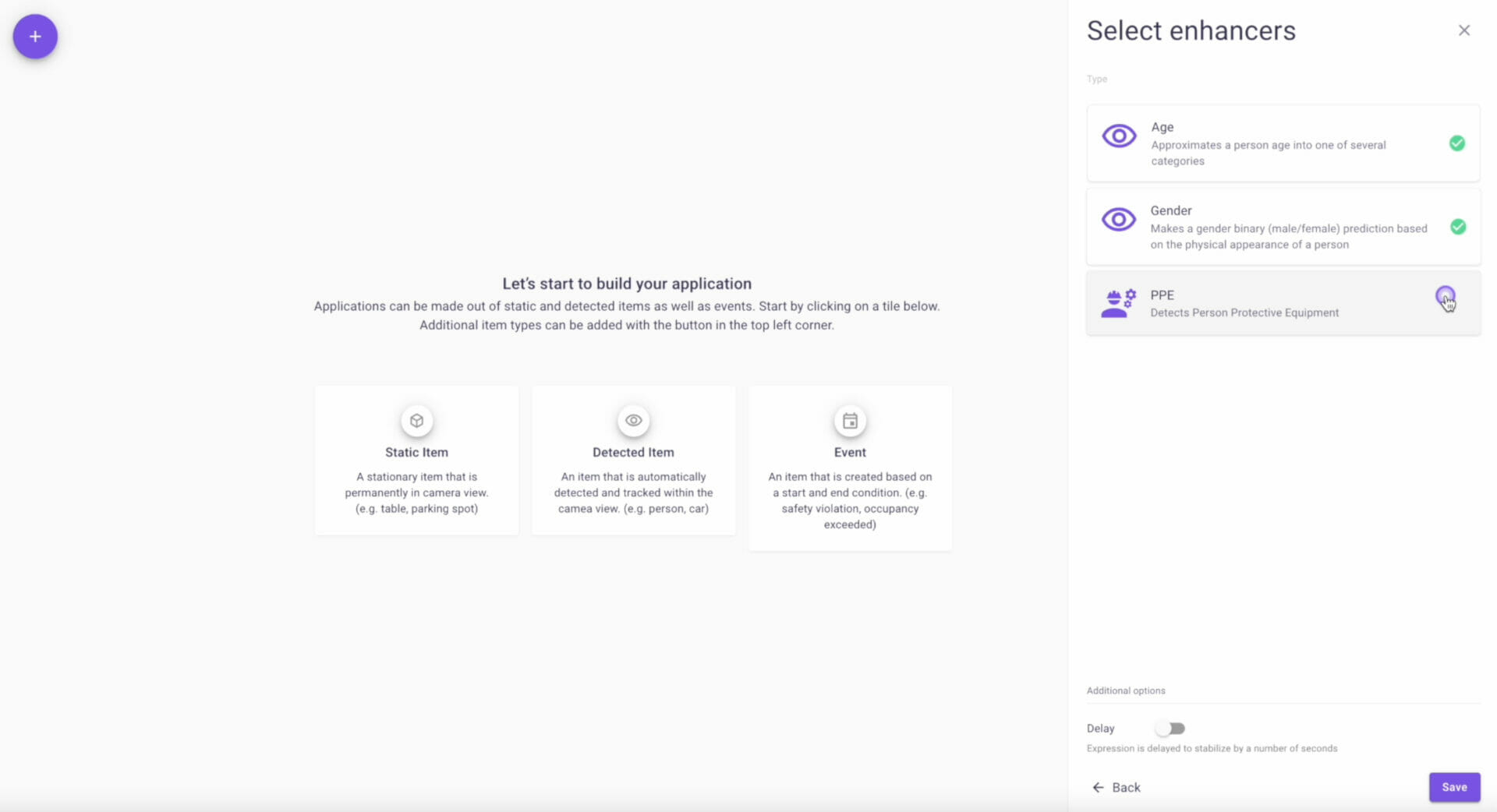

Some models are accompanied by what we call an enhancer. Enhancers are also machine learning models, trained to detect additional attributes of an object that the base model has already detected. For example, the Kibsi person detector has enhancers that can detect age, gender, or even whether a person is wearing personal protective equipment (PPE).

The relationship between detected items and enhancers, using the person detector as an example, is a good way to illustrate the Kibsi data model in action. First and foremost, we capture a record in our data for each detected person, regardless of anything else about that person. Then we enrich that person record with attributes for age, gender, or PPE state, much like adding columns to a row in a database table.
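The “rows and columns” analogy can be sketched in a few lines of Python. This is a hypothetical model, not Kibsi’s API: each detection is a plain dictionary (a row), and each enhancer is a function that returns extra attributes (columns). The two enhancers below are placeholders that read precomputed scores instead of running real models.

```python
from typing import Callable

# Hypothetical sketch, not the Kibsi API: a detected "person" is a record (a row),
# and each enhancer contributes extra attributes (columns) to that record.
Record = dict
Enhancer = Callable[[Record], dict]


def hard_hat_enhancer(record: Record) -> dict:
    # Placeholder for a PPE model; a real enhancer would run inference on the
    # image crop. Here we simply threshold a precomputed score.
    return {"hard_hat": record["hat_score"] >= 0.5}


def age_band_enhancer(record: Record) -> dict:
    # Placeholder for an age model, bucketing a precomputed estimate.
    return {"age_band": "adult" if record["age_estimate"] >= 18 else "minor"}


def enrich(record: Record, enhancers: list[Enhancer]) -> Record:
    """Return a copy of the detection record with every enhancer's attributes added."""
    enriched = dict(record)
    for enhancer in enhancers:
        enriched.update(enhancer(record))
    return enriched


# The person record exists regardless of what the enhancers find.
person = {"id": 7, "class": "person", "bbox": (40, 60, 120, 300),
          "hat_score": 0.82, "age_estimate": 34}
row = enrich(person, [hard_hat_enhancer, age_band_enhancer])
print(row["hard_hat"], row["age_band"])  # True adult
```

Keeping the base record separate from the enhancer output mirrors the point in the transcript: the person is captured first, and enhancers only add detail to that existing record.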

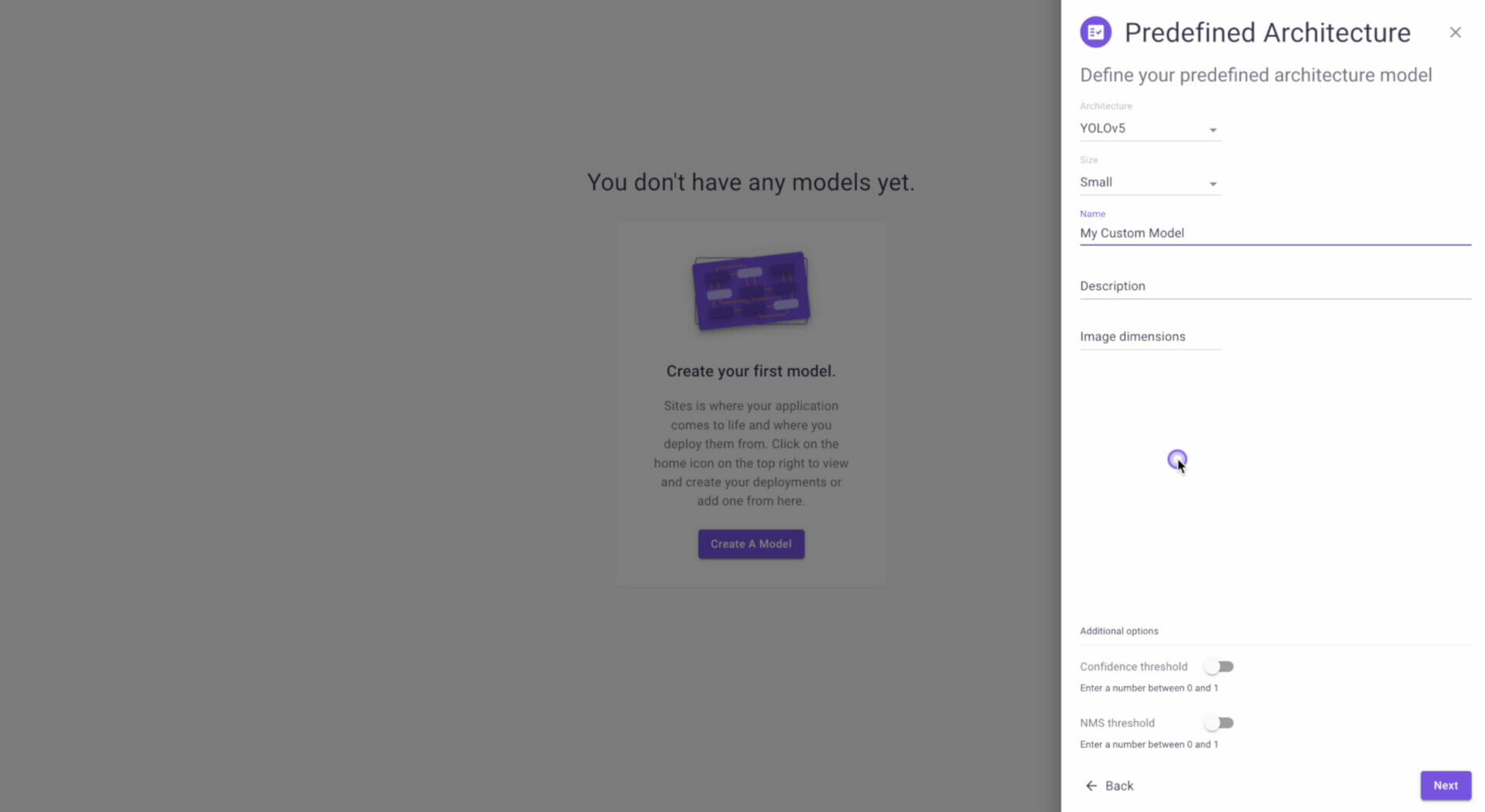

In addition to the library of pre-trained detectors, Kibsi also supports custom detectors, so you can bring your own models, trained on your own video data, to the Kibsi platform and detect anything you need in your Kibsi application. Later in this series, we’ll show you step by step how to train and import your own custom detectors.

Events

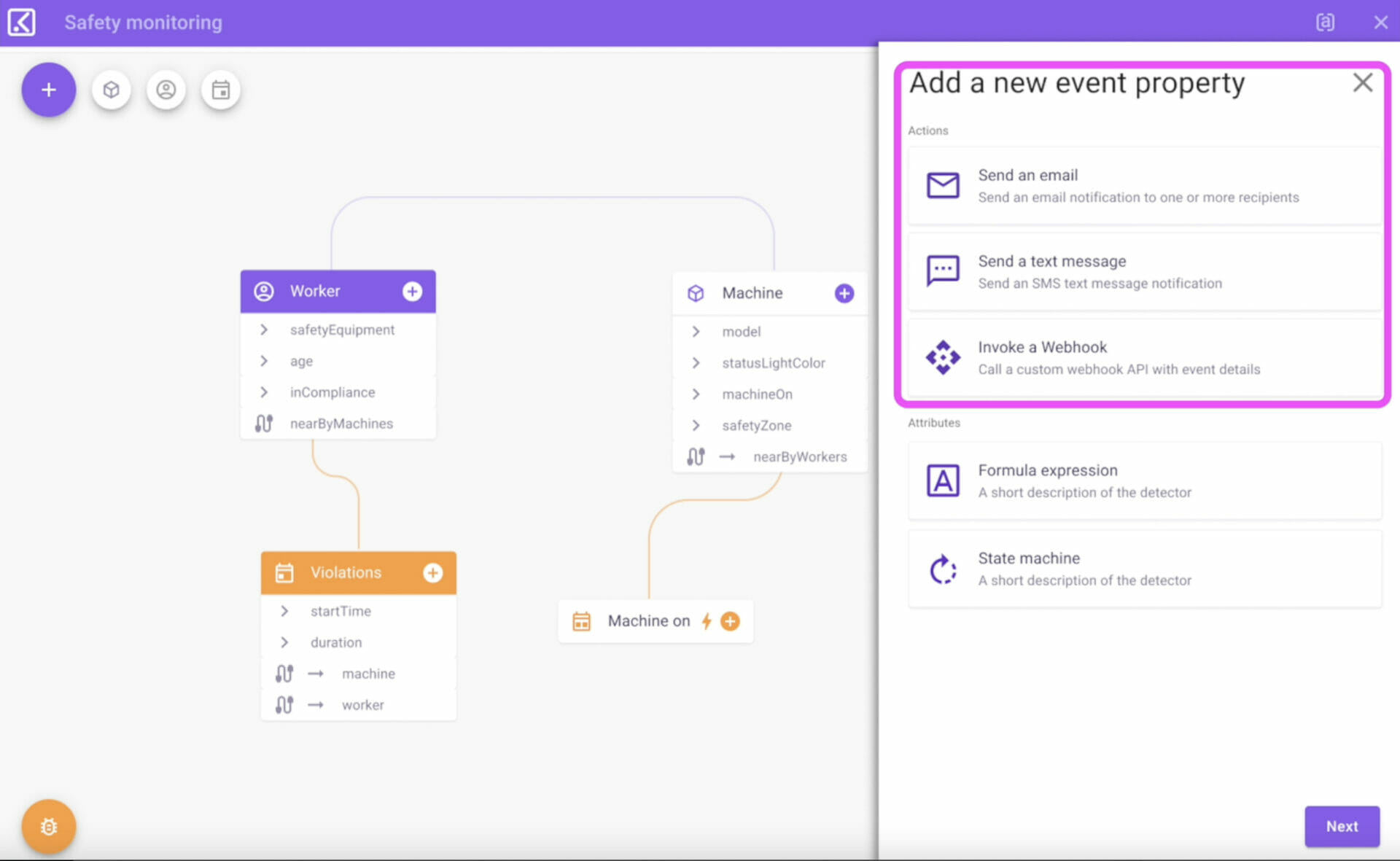

Events let us define start and end conditions on our data. When those conditions are triggered, Kibsi not only captures the data for that event but can also automate actions, such as taking a snapshot image or short video clip when the event occurs, sending an email or text message, or triggering a webhook to integrate with third-party systems.
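As a rough sketch of how start and end conditions turn a stream of states into event records, the Python below uses the airport fueling example. Everything here (`EventDef`, `run_events`, the `fuel_truck_at_gate` field) is hypothetical and not Kibsi’s configuration format; the `on_start` callbacks stand in for actions such as a snapshot or a webhook call.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical sketch, not Kibsi's API: an event opens when start(state) is true
# and closes when end(state) is true; actions fire when the event opens.


@dataclass
class EventDef:
    name: str
    start: Callable[[dict], bool]
    end: Callable[[dict], bool]
    on_start: list[Callable[[str, float], None]]


def run_events(defn: EventDef, frames) -> list[tuple]:
    """frames: iterable of (timestamp, state). Returns (start_ts, end_ts) pairs."""
    events, opened_at = [], None
    for ts, state in frames:
        if opened_at is None and defn.start(state):
            opened_at = ts
            for action in defn.on_start:  # e.g. snapshot, email, webhook
                action(defn.name, ts)
        elif opened_at is not None and defn.end(state):
            events.append((opened_at, ts))
            opened_at = None
    return events


notifications = []  # stand-in for an email/text/webhook integration
fueling = EventDef(
    name="fueling",
    start=lambda s: s["fuel_truck_at_gate"],
    end=lambda s: not s["fuel_truck_at_gate"],
    on_start=[lambda name, ts: notifications.append(f"{name} started at t={ts}")],
)
frames = [(0, {"fuel_truck_at_gate": False}),
          (5, {"fuel_truck_at_gate": True}),
          (9, {"fuel_truck_at_gate": True}),
          (20, {"fuel_truck_at_gate": False})]
result = run_events(fueling, frames)
print(result)         # [(5, 20)]
print(notifications)  # ['fueling started at t=5']
```

Recording the start and end timestamps is what makes questions like “how long did fueling take?” answerable later: the duration is simply `end - start`.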

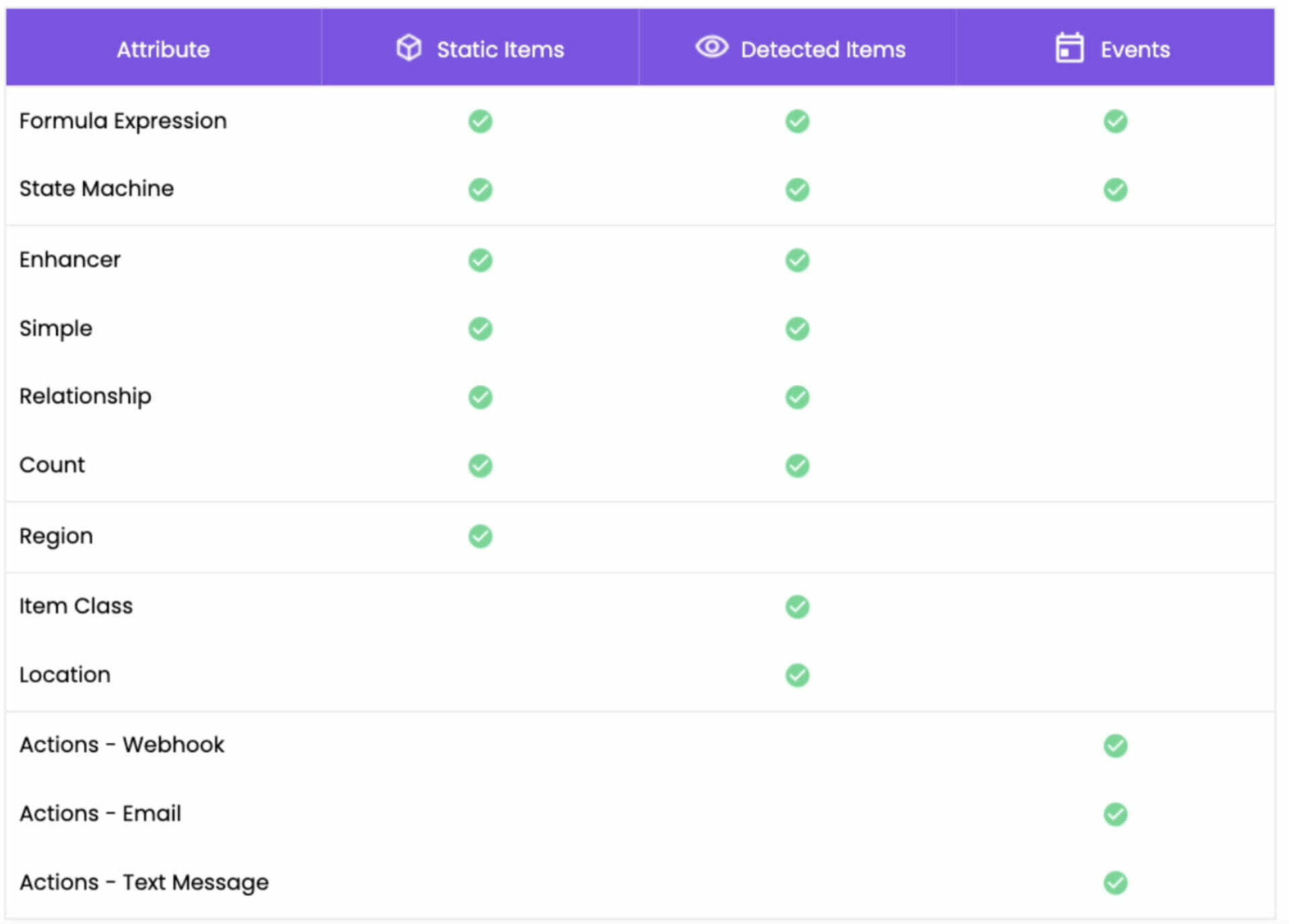

Attributes

Our three main building blocks of static item types, detected item types, and events can all be further customized by adding attributes. Here’s a look at the different types of attributes and the building blocks that support them.

Attributes represent some of the most powerful features in the Kibsi platform, things like relationships, regions, counts, and other attributes as well as the expressions used to customize them. Some attributes even allow you to enable tracking, which will show the first, current, and last values of the attribute. These can all be used together to build rich data models and application logic ranging from simple to complex. We’ll do a deep dive on attributes in a later Getting Started video.
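Tracking can be pictured as keeping a small amount of history per attribute. The sketch below is hypothetical and makes an assumption about the semantics, since the exact Kibsi behavior isn’t described here: “first” is the first value observed for an item, “current” is the latest value while the item is visible, and “last” is the value it had when it was last seen, which survives after the item leaves the stream. The class name `TrackedAttribute` is illustrative.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class TrackedAttribute:
    """Hypothetical tracked attribute; the first/current/last semantics are assumed."""
    first: Optional[Any] = None
    current: Optional[Any] = None
    last: Optional[Any] = None
    seen: bool = False  # lets None be a legitimate observed value

    def observe(self, value: Any) -> None:
        """Record a new value while the item is visible in the stream."""
        if not self.seen:
            self.first = value
            self.seen = True
        self.current = value
        self.last = value

    def item_left(self) -> None:
        """The item is no longer visible: keep first and last, clear current."""
        self.current = None


# e.g. a forklift's zone attribute as it moves through the warehouse
zone = TrackedAttribute()
for value in ["dock", "aisle 3", "aisle 5"]:
    zone.observe(value)
print(zone.first, zone.current, zone.last)  # dock aisle 5 aisle 5
zone.item_left()
print(zone.first, zone.current, zone.last)  # dock None aisle 5
```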

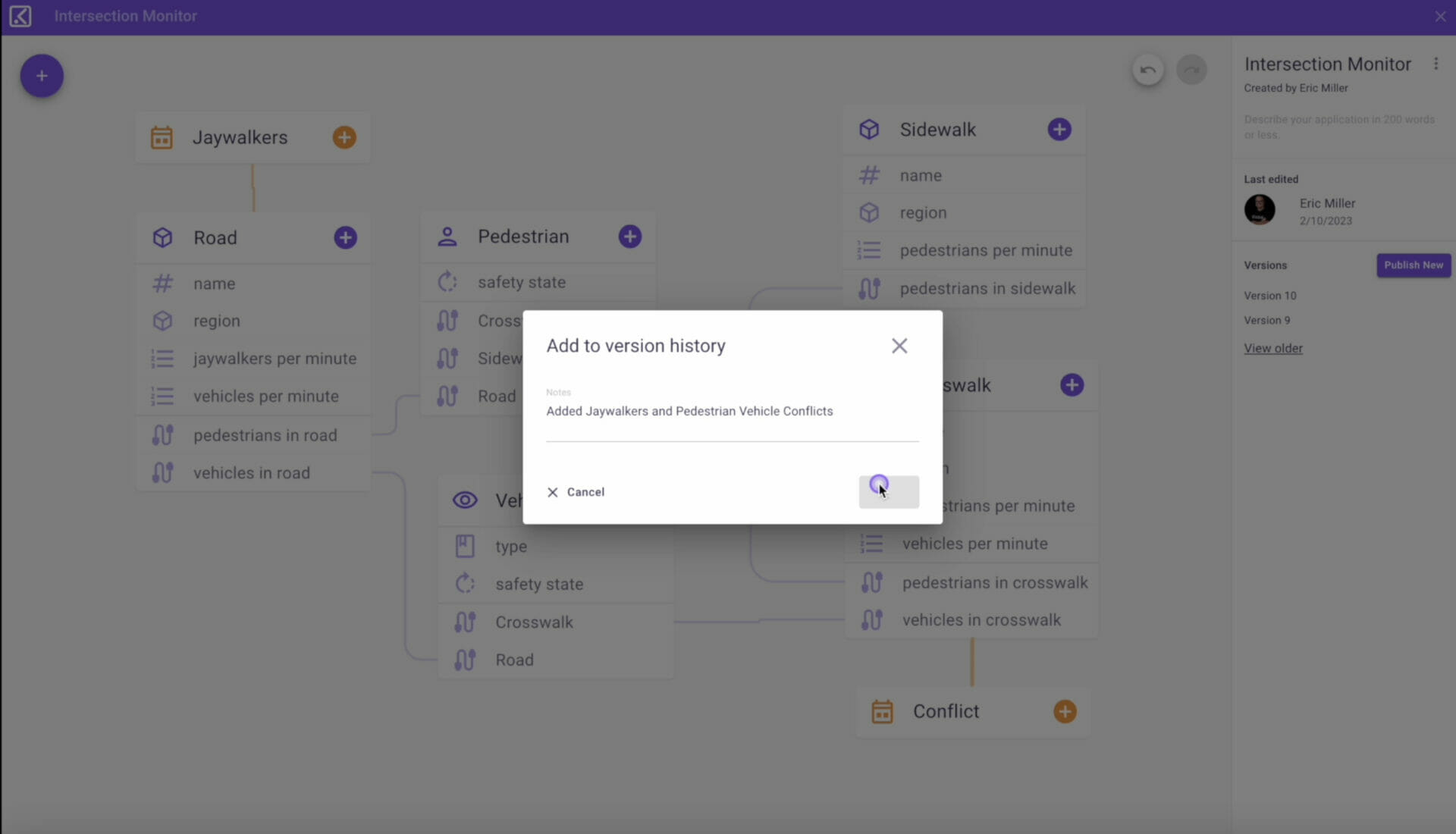

Once you’ve used static and detected item types, events, and attributes to build a Kibsi application for the data model you want, you can publish a version of your application. Publishing lets you choose a specific application version when you define a deployment later on. It also lets you add new features to your application, test those features carefully on a single camera before a larger deployment, and always safely roll back to a previous version if something unexpected occurs.
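The publish, pin, and roll back workflow can be illustrated with a small hypothetical model (not the Kibsi API). Each published version is an immutable snapshot of the application’s draft; a deployment pins one version at a time and remembers its history, so a single camera can try a new version and return to the previous one.

```python
from copy import deepcopy


class App:
    """Hypothetical app with an editable draft and immutable published versions."""

    def __init__(self, name: str):
        self.name = name
        self.draft: dict = {}
        self.versions: list[dict] = []  # versions[i] holds version i + 1

    def publish(self) -> int:
        """Snapshot the current draft and return its version number."""
        self.versions.append(deepcopy(self.draft))
        return len(self.versions)


class Deployment:
    """Hypothetical deployment that pins one published version at a time."""

    def __init__(self, app: App, version: int):
        self.app = app
        self.history: list[int] = []
        self.upgrade(version)

    def upgrade(self, version: int) -> None:
        if not 1 <= version <= len(self.app.versions):
            raise ValueError(f"version {version} has not been published")
        self.history.append(version)

    def rollback(self) -> int:
        """Return to the previously pinned version, if there is one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.version

    @property
    def version(self) -> int:
        return self.history[-1]

    @property
    def definition(self) -> dict:
        return self.app.versions[self.version - 1]


app = App("restaurant")
app.draft["item_types"] = ["table"]
v1 = app.publish()
app.draft["item_types"].append("person")  # a new feature, still in the draft
v2 = app.publish()

camera_1 = Deployment(app, v1)
camera_1.upgrade(v2)         # try the new version on a single camera
print(camera_1.definition)   # {'item_types': ['table', 'person']}
camera_1.rollback()          # something unexpected happened: roll back
print(camera_1.definition)   # {'item_types': ['table']}
```

The `deepcopy` in `publish` is the key design choice: later edits to the draft cannot change a version that a deployment already depends on.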

Sites, streams & deployments

Sites

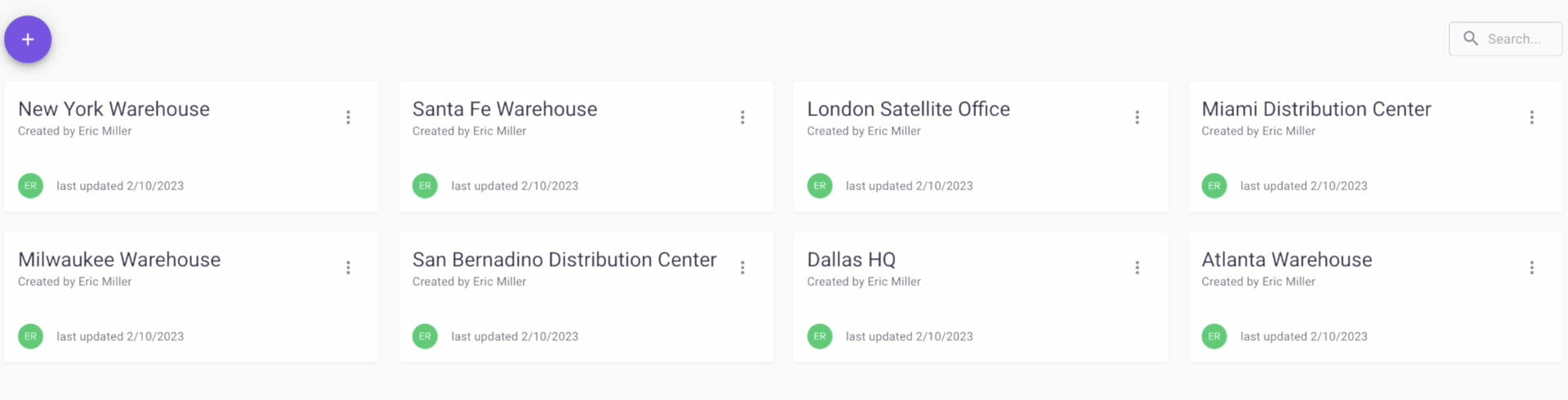

Sites in Kibsi are a way to organize your streams and deployments. How you organize your sites is completely up to you, but one common approach is to use sites to organize deployments and streams based on the physical geographic location of the cameras, such as ‘St. Louis Warehouse’ or ‘Milwaukee Plant.’ Whatever approach you decide to use, keep in mind that a Kibsi site is a logical container for both streams and deployments.

Streams

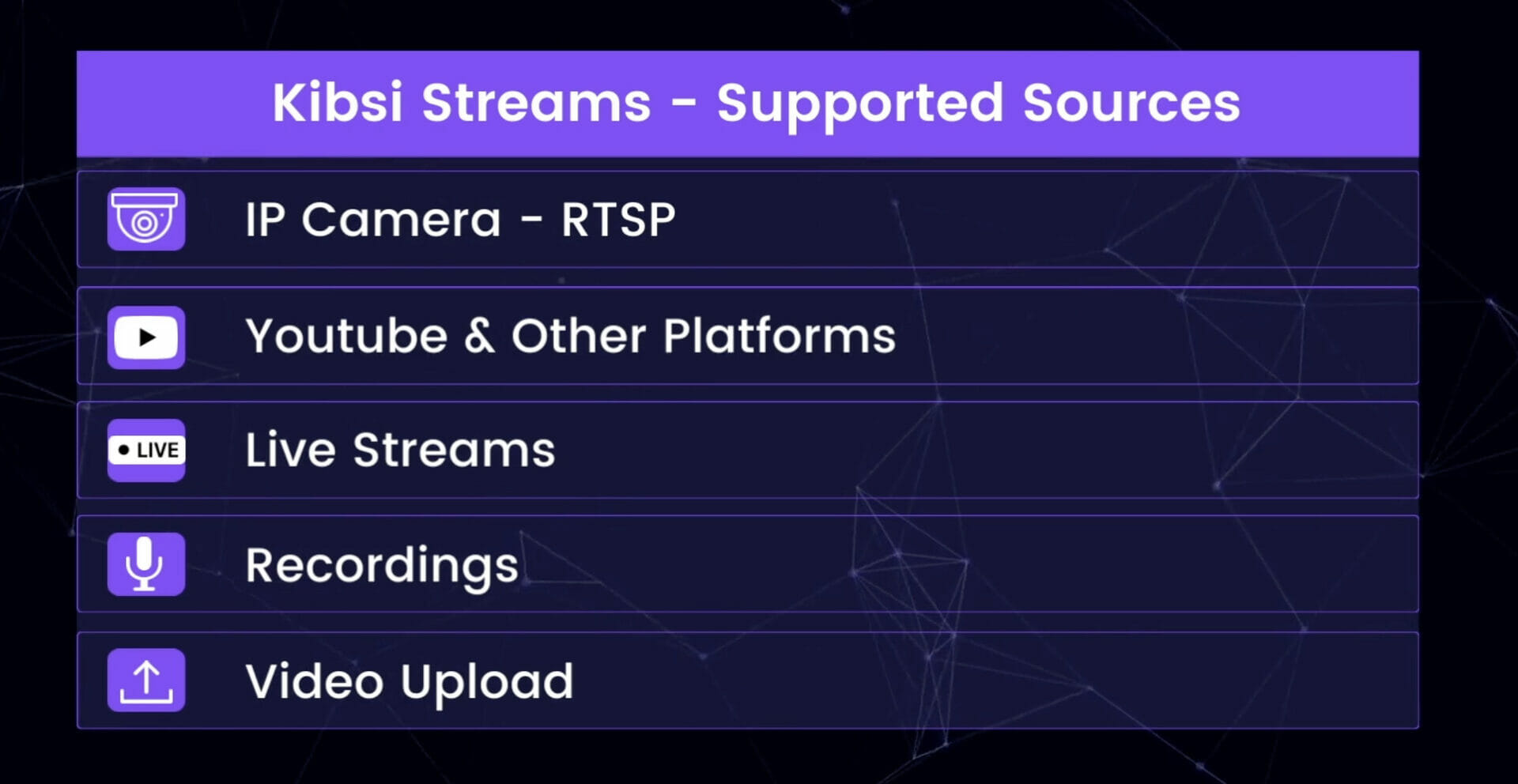

A Kibsi stream can be the IP address or hostname of any physical camera that supports the Real-Time Streaming Protocol (RTSP), or the URL of any streaming source that Kibsi supports. These include YouTube and many other video platforms, for both livestreams and recorded video, and Kibsi even supports uploading recorded video files from your local computer. When configuring a stream, you can define a snapshot image, which is used during the deployment process to help you define any static regions.

Deployments

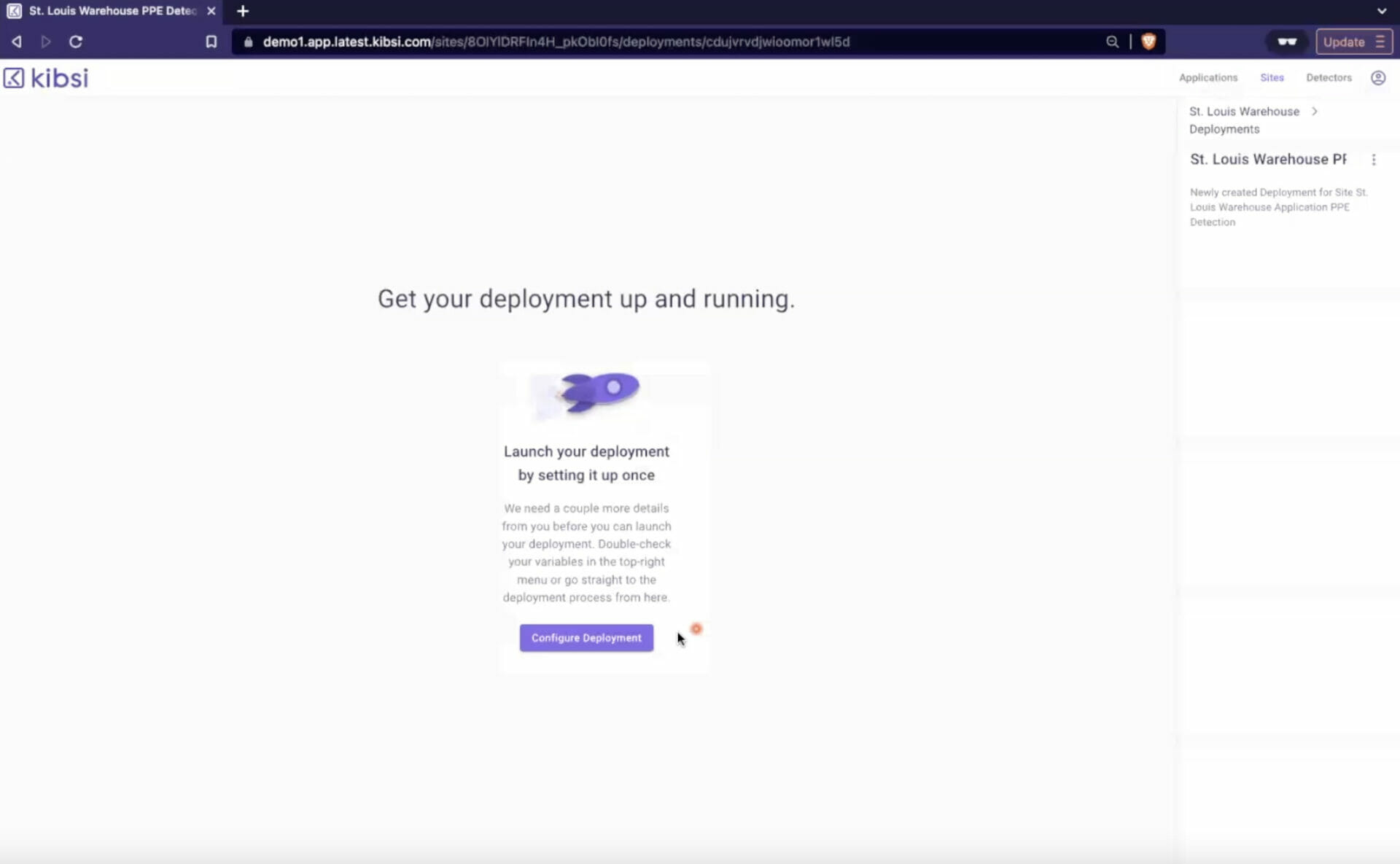

Next, let’s talk about deployments. A deployment takes any published version of a Kibsi application and pairs it with any stream from the same site. During the deployment process, you choose which Kibsi app you want to deploy, specify the version, choose a stream, and configure the static items and regions that are unique to that stream.
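The relationship between sites, streams, and deployments can be sketched as a container that only allows a deployment to use one of its own streams. This is a hypothetical Python model, not Kibsi’s API; the app name, version number, stream name, and RTSP URL (which uses a reserved documentation IP address) are all illustrative placeholders.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Stream:
    """Hypothetical stream: a name plus a source URL (e.g. an RTSP camera)."""
    name: str
    url: str


@dataclass
class Site:
    """Hypothetical site: a logical container for streams and deployments."""
    name: str
    streams: dict = field(default_factory=dict)
    deployments: list = field(default_factory=list)

    def add_stream(self, stream: Stream) -> None:
        self.streams[stream.name] = stream

    def deploy(self, app_name: str, version: int, stream_name: str,
               static_items: dict) -> dict:
        """Pair a published app version with a stream from this same site."""
        if stream_name not in self.streams:
            raise ValueError(f"stream {stream_name!r} is not in site {self.name!r}")
        deployment = {
            "app": app_name,
            "version": version,
            "stream": self.streams[stream_name],
            # Static items and their regions are configured per stream.
            "static_items": static_items,
        }
        self.deployments.append(deployment)
        return deployment


site = Site("St. Louis Warehouse")
site.add_stream(Stream("dock-cam", "rtsp://192.0.2.10/stream1"))
site.deploy("forklift-routing", 1, "dock-cam",
            {"Aisle 1": [(0, 0), (200, 0), (200, 400), (0, 400)]})

try:
    site.deploy("forklift-routing", 1, "milwaukee-line-2", {})
except ValueError as err:
    print(err)  # stream 'milwaukee-line-2' is not in site 'St. Louis Warehouse'
```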

Once a deployment is completed, you’ll have a finished, working Kibsi application that’s turning your video stream into useful data.

Working with data

Once a deployment is running, Kibsi makes it easy to work with your data in a few different ways.

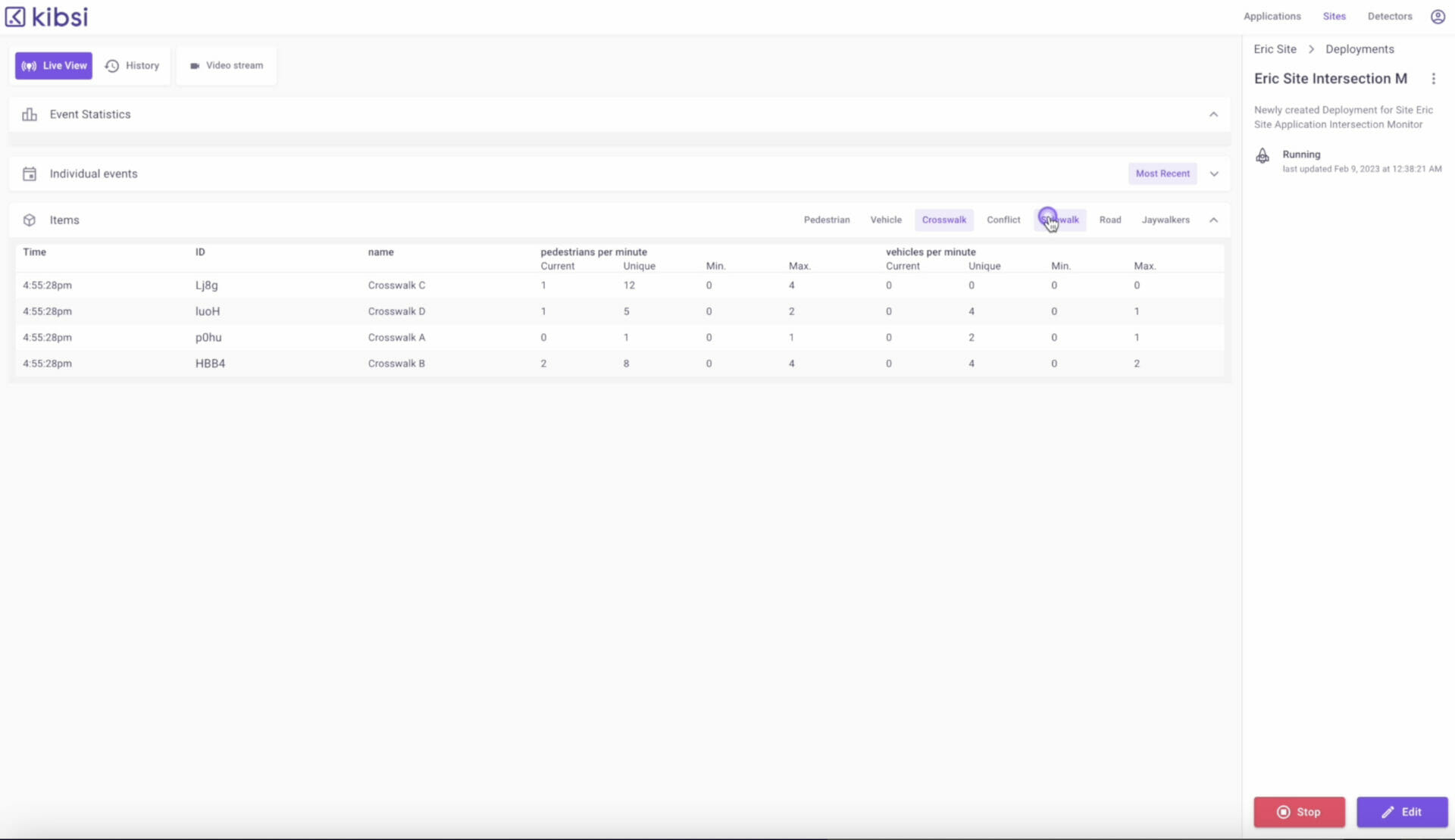

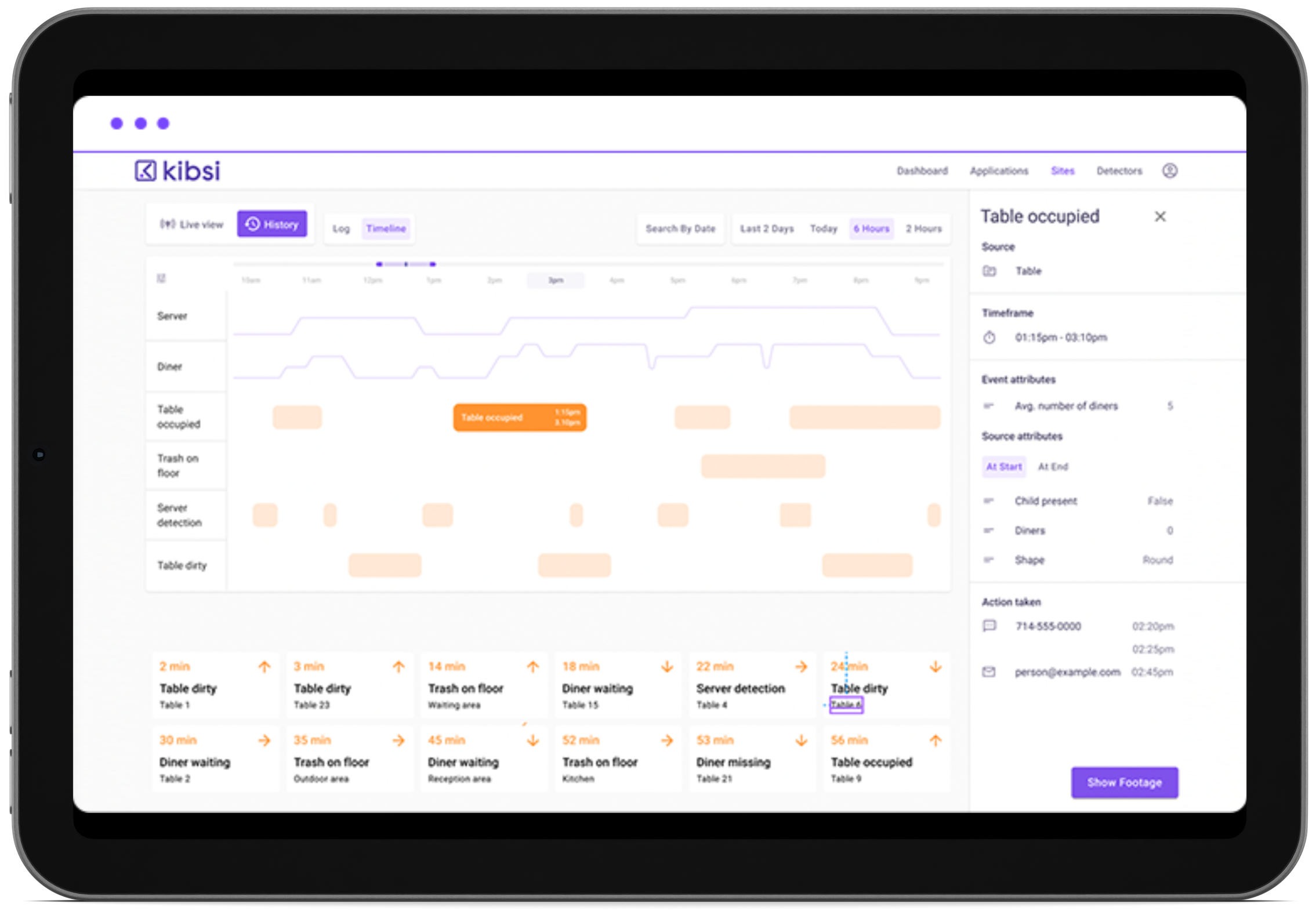

First, there’s a live view that shows a real-time stream of your data and events and includes views for both static and detected items.

Also, if your application includes any events that were configured to capture image snapshots or video clips, opening an event from the live view lets you view that media.

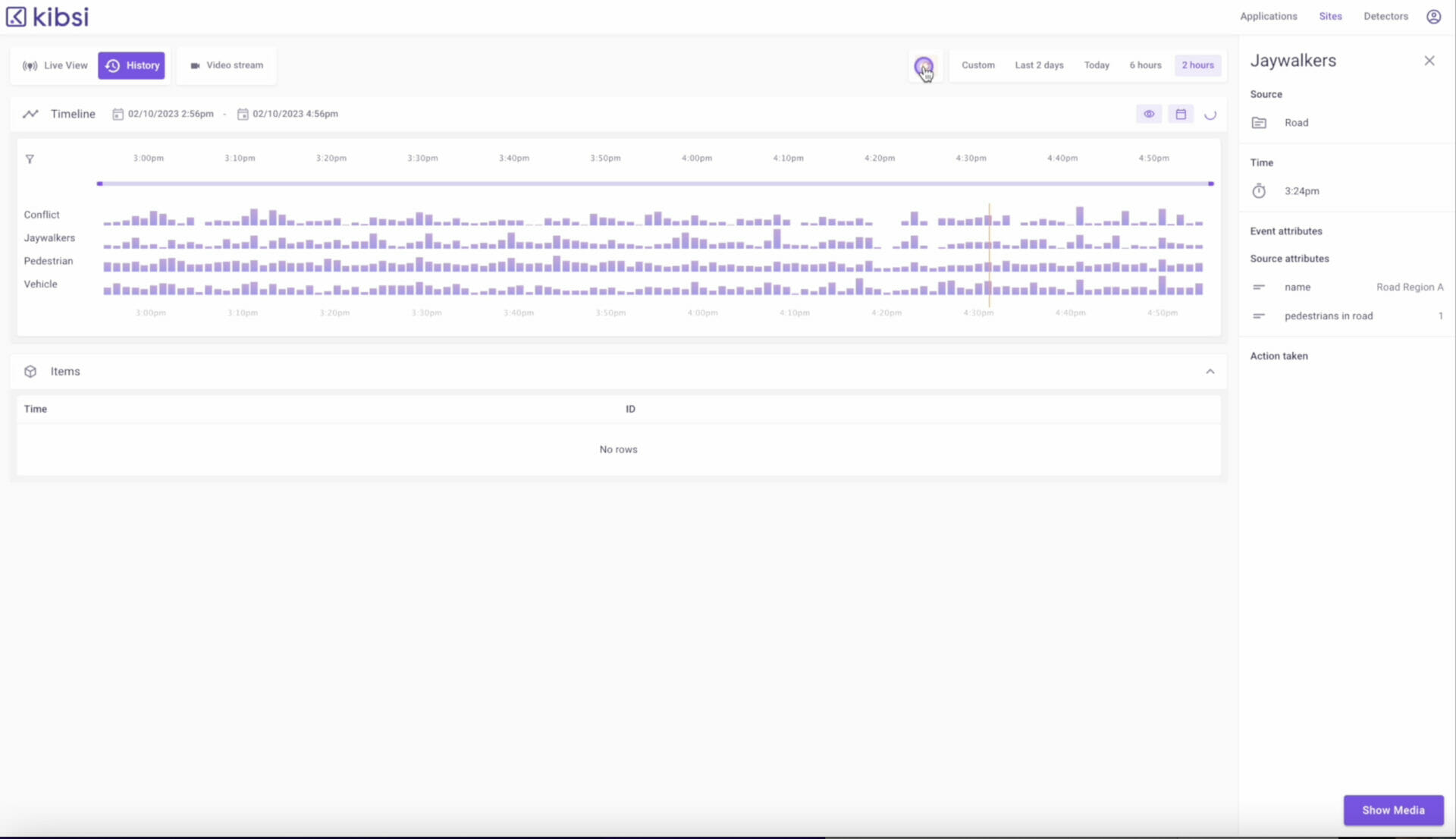

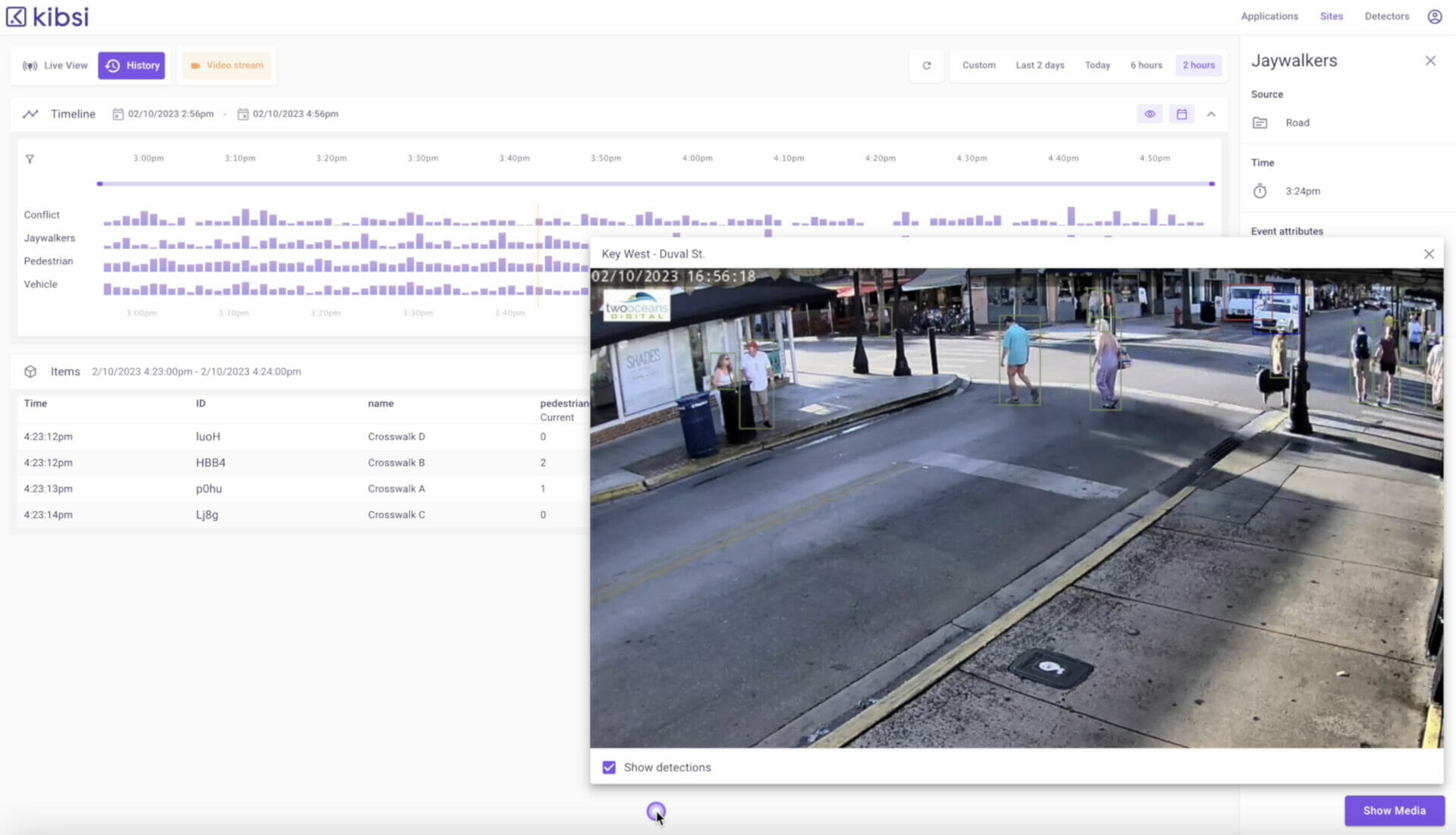

The history view shows aggregated data across several time ranges, from 2 to 48 hours, as well as custom time ranges. The histogram provides an easy way to navigate to the data for a given time slice: clicking a slice displays the data that was seen during that slice, with a separate view for each item type.
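Conceptually, a histogram like this buckets timestamped records into fixed-width slices and counts each slice; clicking a slice then filters the records back down to that interval. The Python below is a hypothetical sketch of that idea, not how Kibsi computes its history view; the function names and sample timestamps are illustrative.

```python
import math
from datetime import datetime, timedelta


def histogram(timestamps, start: datetime, end: datetime,
              width: timedelta) -> list[int]:
    """Count timestamps in each [start + k*width, start + (k+1)*width) slice."""
    n_slices = math.ceil((end - start) / width)
    counts = [0] * n_slices
    for ts in timestamps:
        if start <= ts < end:
            counts[(ts - start) // width] += 1  # timedelta // timedelta -> int
    return counts


def items_in_slice(timestamps, start: datetime, width: timedelta,
                   k: int) -> list[datetime]:
    """Return the timestamps in slice k, like clicking one histogram bar."""
    lo = start + k * width
    hi = lo + width
    return [ts for ts in timestamps if lo <= ts < hi]


start = datetime(2024, 1, 1, 8, 0)
end = start + timedelta(hours=2)
width = timedelta(minutes=30)
seen = [datetime(2024, 1, 1, 8, 5), datetime(2024, 1, 1, 8, 10),
        datetime(2024, 1, 1, 8, 40), datetime(2024, 1, 1, 9, 59),
        datetime(2024, 1, 1, 10, 30)]  # the last one is outside the range

print(histogram(seen, start, end, width))     # [2, 1, 0, 1]
print(items_in_slice(seen, start, width, 1))  # [datetime.datetime(2024, 1, 1, 8, 40)]
```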

The video stream view lets you observe the live stream for this deployment and see items detected in real time, so you can correlate them with the data you are seeing in either the live view or the history view.

In this video, we covered all of the core concepts of using the Kibsi platform. When building your applications, remember the most important concept, the data model, and try to avoid thinking in terms of individual detections. Instead, think about your video stream as a structured data set, and then build your application to define that data model.

Also, be sure to check out the other videos in our Getting Started series.

Until next time, I’m Eric Miller from Kibsi. Thanks for watching!