Why OCR Is Just the Starting Point for Smart Logistics

Intro

Ever had a “where the heck is it?” moment in your warehouse? Yeah, not fun. Misplaced packages aren’t just an annoyance—they’re a domino effect of logistical nightmares and customer complaints. Sure, OCR (Optical Character Recognition) can help read those package labels. But hold the applause, because just reading isn’t enough. We need to really understand what those labels are telling us, and for that, OCR falls a bit short.

OCR Basics: The What and the Why

So, you’ve heard of OCR. It’s the tech that turns your scanned invoices and package labels into machine-readable text. Neat, right? It’s like the Swiss Army knife for any logistics or manufacturing pro: good for everything from automating invoice entry to tracking goods as they dance through your supply chain.

OCR’s Limitations: The Gotchas

Ah, the fine print. OCR is fantastic at capturing text—it’s basically a pro at it. But it’s not so savvy when it comes to understanding the context of that text. Imagine reading a label that says “screws.” That’s great and all, but are these just any screws? Or are they the ultra-specific ones you need on the production line, like, yesterday? OCR won’t know the difference.

Here’s another thing—OCR is kind of high maintenance. You’ve got to tell it exactly where to look on the label. It’s like guiding a friend through a maze while you’re watching from above. But let’s face it, labels are like snowflakes: no two are the same. One supplier’s “screws” are another’s “fasteners,” or, let’s get creative, “assembly thingamajigs.”

The takeaway? OCR is a great tool, but it’s just the opening act. If you’re serious about automating your logistics or fine-tuning your manufacturing processes, you’ll want to look into smarter, more contextual ways of understanding text. And that’s where the real magic happens.

Why Context Matters: The Details OCR Misses

Let’s talk numbers for a sec. According to a study by Gartner, poor data quality costs organizations an average of $12.9 million every year. One way bad data sneaks in? Text that gets read correctly but interpreted wrongly. Imagine this: your warehouse receives a shipment labeled “nuts.” Simple, right? But are we talking cashews for the breakroom or hex nuts for the assembly line? Without context, you might end up with a very well-fed but nonfunctional assembly line.

How Context Elevates OCR: Beyond Reading to Understanding

This is where context sweeps in like a superhero. With context-aware OCR solutions, you’re not just capturing text; you’re capturing the story behind that text. Say your system identifies the label “nuts” alongside other items like “bolts” and “washers.” Context tells you these are probably destined for the assembly line, not the snack bar. And that’s how you transform OCR from a simple text reader into an intelligent asset that makes real-time decisions, streamlining your workflow and making everyone’s life a tad easier.
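To make the “nuts” example concrete, here’s a minimal Python sketch of the idea. It’s an illustration, not how any particular product works: the keyword sets and the `classify_nuts` function are made up for this post, and a real system would learn these associations from data instead of hard-coding them.

```python
# Hypothetical keyword sets, hard-coded purely for illustration.
HARDWARE_TERMS = {"bolts", "washers", "screws", "rivets"}
FOOD_TERMS = {"cashews", "almonds", "coffee", "snacks"}


def classify_nuts(other_items):
    """Guess where a box labeled 'nuts' belongs from the items listed with it."""
    items = {item.strip().lower() for item in other_items}
    hardware_hits = len(items & HARDWARE_TERMS)
    food_hits = len(items & FOOD_TERMS)
    if hardware_hits > food_hits:
        return "assembly line"
    if food_hits > hardware_hits:
        return "breakroom"
    # No evidence either way (or a tie): hand it to a human.
    return "needs review"


destination = classify_nuts(["Bolts", "washers"])
```

The “needs review” fallback is the important design choice here: when the surrounding context doesn’t tip the scale, the sketch declines to guess.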

In a nutshell (pun totally intended), adding context to OCR is like giving it a business degree. It knows what to do, when to do it, and why it’s important. So, while OCR gets the ball rolling, it’s the context that drives it home.

Document Understanding Techniques: The Secret Sauce Behind Smart Labels

Label Parsing: Beyond Basic Text Recognition

Label parsing is like that one friend who reads between the lines when you’re upset but saying you’re “fine.” Just recognizing text? That’s child’s play. Parsing digs deeper. It’s about identifying key elements like dates, SKUs, or serial numbers and understanding their relationship to the rest of the data on the label. In a world of messy, unstructured labels, parsing is your Sherlock Holmes, making sense out of chaos.
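Here’s a rough sketch of what pulling those key elements out of OCR text can look like in Python. The label layout is an assumption: it expects a “SKU:” prefix, an “S/N:” prefix, and an ISO-style date, and the `parse_label` function is hypothetical. Real labels are rarely this polite, which is the point of the next section.

```python
import re
from datetime import datetime

# Assumed label conventions; real labels vary widely between suppliers.
SKU_PATTERN = re.compile(r"\bSKU[:\s]+([A-Z0-9-]+)", re.IGNORECASE)
SERIAL_PATTERN = re.compile(r"\bS/?N[:\s]+([A-Z0-9-]+)", re.IGNORECASE)
DATE_PATTERN = re.compile(r"\b(\d{4}-\d{2}-\d{2})\b")


def parse_label(text):
    """Extract the SKU, serial number, and date from raw OCR text, if present."""
    fields = {}
    sku = SKU_PATTERN.search(text)
    if sku:
        fields["sku"] = sku.group(1)
    serial = SERIAL_PATTERN.search(text)
    if serial:
        fields["serial"] = serial.group(1)
    date = DATE_PATTERN.search(text)
    if date:
        try:
            fields["date"] = datetime.strptime(date.group(1), "%Y-%m-%d").date()
        except ValueError:
            pass  # Looks like a date but is not a valid one (e.g. month 13).
    return fields


label_text = "SKU: HX-1042\nS/N: 88A7731\nShip date 2024-03-15"
fields = parse_label(label_text)
```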

The Challenge of Unstructured Labels

But here’s the rub: labels can be all over the place—literally. One supplier’s “Product ID” might be another’s “Item Code.” Plus, the layout changes more often than a teenager’s social media status. Navigating this maze without a map? Good luck. And this is why OCR alone often falls short. It’s like trying to build IKEA furniture without the instruction manual.
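To see why this gets painful, picture the lookup table someone has to maintain by hand. The sketch below is illustrative only: “Product ID” and “Item Code” come from the example above, while the other aliases and the `normalize_field` helper are invented for this post.

```python
# Hand-maintained alias table. Every new supplier wording means another entry.
FIELD_ALIASES = {
    "product_id": {"product id", "item code", "part no", "part number"},
    "quantity": {"qty", "quantity", "count"},
}


def normalize_field(raw_name):
    """Map a supplier's field name to a canonical one, or None if unknown."""
    name = raw_name.strip().rstrip(":").strip().lower()
    for canonical, aliases in FIELD_ALIASES.items():
        if name in aliases:
            return canonical
    return None


known = normalize_field("Item Code")    # matches an alias
unknown = normalize_field("Artikelnr")  # a wording nobody added yet
```

The second call is where it breaks down: any wording that isn’t already in the table simply falls through.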

Multi-Phase Approach: The Smart Way Forward

So, how do you crack this nut? Enter the multi-phase approach.

- Initial Scanning: First, you scan the document to capture the text. That’s OCR doing its basic thing.

- Manual Mapping and Its Limitations: Some businesses try to bridge the gap with manual mapping. But let’s be honest, that’s like using a band-aid on a leaky pipe. It’s time-consuming and leaves a margin for human error that’s about as comforting as a wooden chair in a waiting room.

- Machine Learning on Top: The best practice? Marry OCR with machine learning algorithms to adapt to label variations automatically. Now you’re cooking with gas. This combo takes in raw, unstructured data and spits out organized, actionable insights.
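As a rough sketch of how the phases fit together, the Python below takes lines of OCR output, maps each field name to a canonical one, and returns a structured record. One big caveat: fuzzy string matching from Python’s `difflib` stands in for the machine learning step here, purely so the example runs on its own. A trained model would replace `map_field`. The field names, aliases, and cutoff are all assumptions.

```python
from difflib import get_close_matches

# Assumed canonical schema and a few known aliases (illustrative only).
CANONICAL_FIELDS = ["product id", "ship date", "quantity"]
KNOWN_ALIASES = {"item code": "product id", "qty": "quantity"}


def map_field(raw_name, cutoff=0.75):
    """Map a raw field name to a canonical one; tolerate small OCR typos."""
    name = raw_name.strip().lower()
    if name in KNOWN_ALIASES:
        return KNOWN_ALIASES[name]
    # Placeholder for a learned model: pick the closest canonical name.
    matches = get_close_matches(name, CANONICAL_FIELDS, n=1, cutoff=cutoff)
    return matches[0] if matches else None


def structure_label(ocr_lines):
    """Turn 'Name: value' lines from OCR into a dict keyed by canonical field."""
    record = {}
    for line in ocr_lines:
        raw_name, separator, value = line.partition(":")
        if not separator:
            continue  # Not a 'Name: value' line, e.g. handling instructions.
        field = map_field(raw_name)
        if field is not None:
            record[field] = value.strip()
    return record


record = structure_label(["Prodct ID: HX-1042", "Qty: 250", "Handle with care"])
```

Notice that the misspelled “Prodct ID” still lands in the right field, which an exact lookup table would miss.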

Enter Transformer Models: The Brainiacs of Text Understanding

What Are Transformer Models?

Think of Transformer models as the super-smart detectives of the text world. While OCR is like your beat cop who reports what he sees, a Transformer model is the seasoned investigator who puts all the pieces together. Thanks to a mechanism called attention, these models weigh each word against the words around it, so they’re not just reading text but actually grasping the context, relationships, and subtle cues.

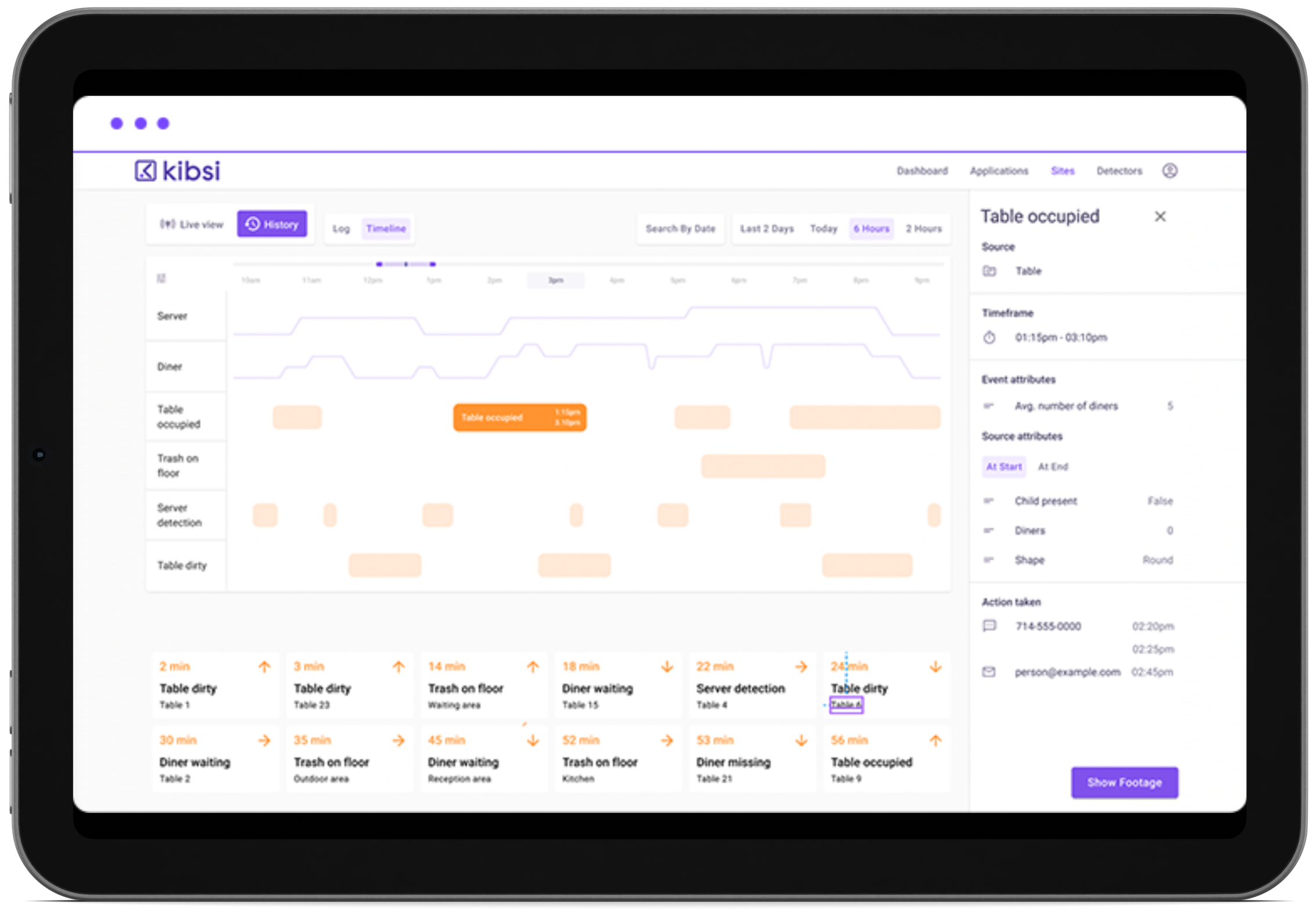

How Kibsi Uses Transformer Models

Let’s be clear: we’re all about solving real-world problems over here. That’s why Kibsi leverages Transformer models. These nifty algorithms help us make sense of labels in a way that’s more nuanced and adaptable. Need to know if that package should be expedited or stored? Our models have your back, converting what they ‘see’ into actionable insights you can actually use.

The Challenge of Overfitting

What Is Overfitting?

Ah, overfitting. It’s like that eager new employee who’s so focused on impressing you that they miss the big picture. In technical terms, a model that’s ‘overfit’ has learned the training data too well, quirks and all, so it struggles to generalize to new, unseen data. Amir once mentioned a forklift scenario where a model learned to identify a forklift only from a specific angle. Show it a different perspective, and it’s as confused as a cat in a bathtub.
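Here’s a deliberately extreme text version of the forklift problem, sketched in Python. The “model” just memorizes its training labels word for word, so it is overfit by design. The data and function names are invented for illustration. The thing to watch is the gap between accuracy on seen and unseen labels.

```python
def train_memorizer(examples):
    """'Train' by memorizing exact label text. This is overfitting by design."""
    return dict(examples)


def accuracy(model, examples):
    """Fraction of examples whose predicted category matches the true one."""
    correct = sum(1 for text, category in examples if model.get(text) == category)
    return correct / len(examples)


# Made-up labels: same products, different supplier wording.
training_labels = [("HEX NUTS M8", "hardware"), ("CASHEW NUTS 1KG", "food")]
unseen_labels = [("M8 hex nuts", "hardware"), ("Cashews, 1 kg", "food")]

model = train_memorizer(training_labels)
train_accuracy = accuracy(model, training_labels)
unseen_accuracy = accuracy(model, unseen_labels)
```

A perfect score on the training labels next to a poor score on new wording is the classic warning sign, and it only shows up if you test on labels the model has never seen.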

How to Avoid Overfitting

The secret sauce? Diversity. Just as a balanced diet keeps you healthy, a balanced set of data keeps your model in top shape. Here’s how to do it:

- Use Diverse Data: Train your model on labels from various suppliers, in different formats, and with varied terminology.

- Synthetic Data: When real-world data is scarce, synthetic data (artificially generated labels that mimic real ones) is like a gym membership for your model, helping it train in varied conditions.

In the end, it’s about building a model that’s robust, adaptable, and ready for whatever the real world throws at it.
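For a feel of what synthetic label data can look like, here’s a small Python sketch that takes one record and renders it with different field names, separators, and line orders. Everything in it is an assumption made for illustration: the variant lists, the `synthesize_label` function, and the sample record. Real synthetic data for a vision pipeline would also vary fonts, layouts, and image quality.

```python
import random

# Hypothetical variations a supplier might use for the same fields.
FIELD_NAME_VARIANTS = {
    "product_id": ["Product ID", "Item Code", "Part No."],
    "quantity": ["Qty", "Quantity", "Count"],
}
SEPARATORS = [": ", " - ", "="]


def synthesize_label(record, rng):
    """Render one record as label text with random naming, separators, and order."""
    lines = []
    for field, value in record.items():
        name = rng.choice(FIELD_NAME_VARIANTS[field])
        separator = rng.choice(SEPARATORS)
        lines.append(f"{name}{separator}{value}")
    rng.shuffle(lines)  # Suppliers do not agree on field order either.
    return "\n".join(lines)


rng = random.Random(42)  # Seeded so the generated set is reproducible.
record = {"product_id": "HX-1042", "quantity": "250"}
samples = [synthesize_label(record, rng) for _ in range(5)]
```

Seeding the random generator is a small but useful habit: it lets you regenerate the exact same training set when you need to compare two model versions.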

Wrapping It Up: Why This All Matters

Let’s bring it home, folks. Understanding labels isn’t just about reading the words on the box. It’s about knowing where that box fits in the grand scheme of things—be it a critical supply chain, a manufacturing assembly line, or a logistics hub. The nuances, the relationships between terms, and the contextual insights are the game-changers here. If you’re skimming the surface with basic OCR, you’re leaving actionable insights on the table.

Your Next Move: Get the Big Picture with Kibsi

Time for some real talk. If you’re stuck on OCR, you’re playing checkers while the world is playing chess. But hey, it’s never too late to join the game. Kibsi’s platform is your pathway to that next level of document understanding. We’re not just reading the labels; we’re interpreting them, contextualizing them, and turning them into real, actionable business insights.

So, ready to see your documents—and your business—in a new light? Let’s talk.