Kibsi Platform: Detect

Detect anything using our models or yours

With hundreds of built-in detectors, thousands of standard models, and custom model support, Kibsi means business

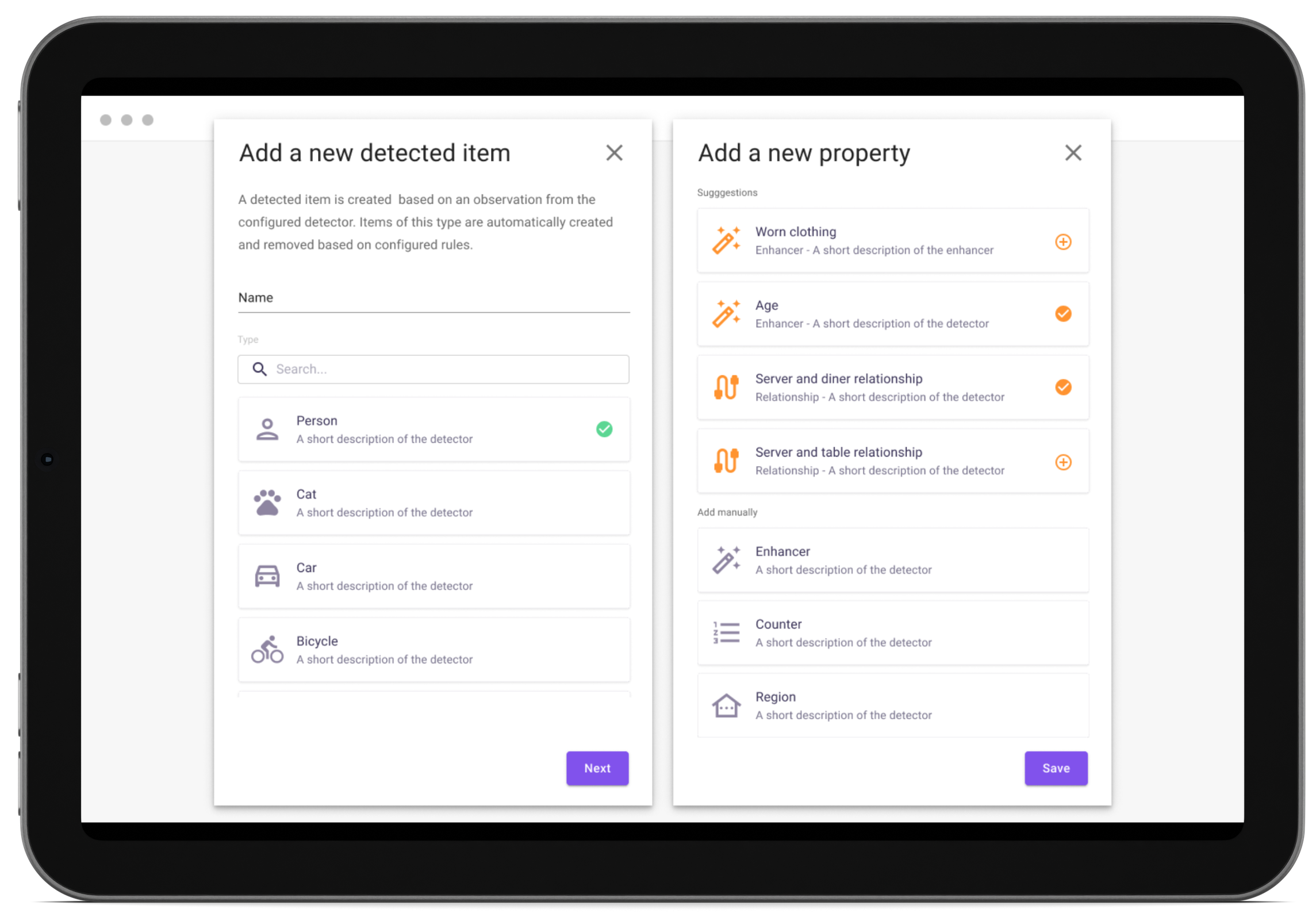

Kibsi models your one-of-a-kind world

Gain contextual understanding of your video stream with thousands of built-in detectors and enhancers that locate, track, and relate objects as they move across a scene. Kibsi is designed to work with your existing cameras, all while respecting privacy.

Imagine a giant warehouse full of built-in detectors

Kibsi comes with built-in computer vision models for thousands of objects and classes, curated to provide state-of-the-art detections.

Compose multiple detectors with simple logic to gain additional insights without the burden of having to train new models.

- People

- Vehicles

- Symbols & logos

- Heavy machinery

- Animals

- Gauges, QR codes, etc.

- Common objects

- And so much more
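To make "compose multiple detectors with simple logic" concrete, here is a minimal sketch. It takes the output of two generic detectors (people and a hypothetical `forklift` class) and flags every person whose bounding-box center lies within a given pixel distance of a forklift's center. The `Detection` record, the labels, and the center-distance rule are all assumptions made for illustration. This is not Kibsi's API or detection format.

```python
from dataclasses import dataclass
from math import hypot


# Hypothetical detection record; field names are illustrative, not Kibsi's schema.
@dataclass
class Detection:
    label: str
    x1: float
    y1: float
    x2: float
    y2: float

    def center(self) -> tuple[float, float]:
        # Midpoint of the bounding box in pixel coordinates.
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


def people_near(detections: list[Detection], target_label: str,
                max_dist: float) -> list[Detection]:
    """Return person detections whose box center is within max_dist pixels
    of the center of any detection labeled target_label."""
    target_centers = [d.center() for d in detections if d.label == target_label]
    result = []
    for d in detections:
        if d.label != "person":
            continue
        px, py = d.center()
        if any(hypot(px - tx, py - ty) <= max_dist for tx, ty in target_centers):
            result.append(d)
    return result
```

For example, with one person standing beside a forklift and another across the frame, `people_near(frame, "forklift", 120.0)` returns only the first person. A production rule would more likely work in ground-plane coordinates than in raw pixels.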

Your custom models are first class, too

Add custom detectors built on common architectures such as YOLOv5, ResNet, or Faster R-CNN, or create fully custom computer vision models built on PyTorch or TensorFlow. Kibsi gives your custom models superpowers with automatic interaction detection and stateful tracking.

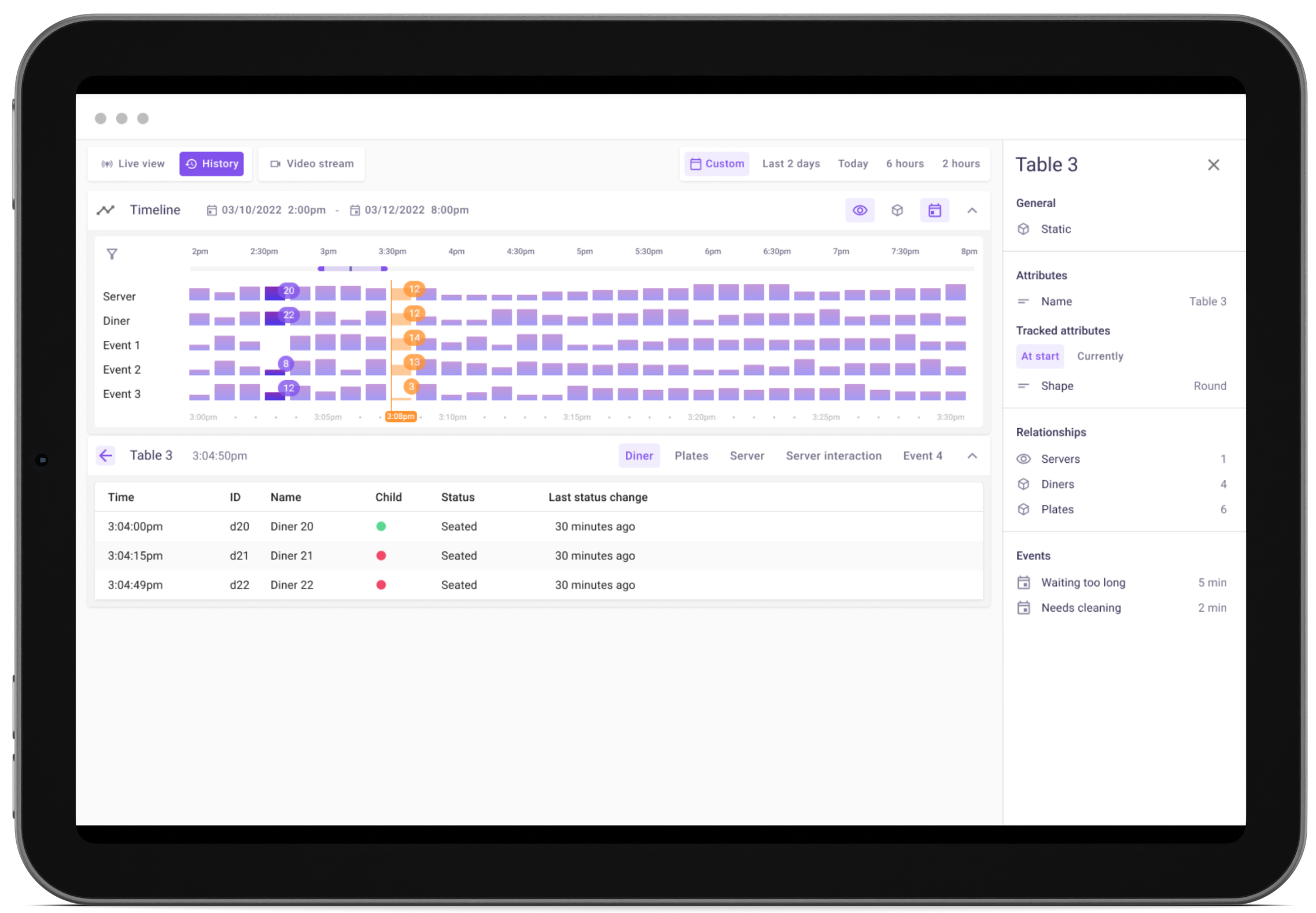

Robust, stateful object tracking

Enhance detections with object attributes. Run additional computer vision models on detected objects to add context and attributes, creating limitless combinations.

- Track the movement of people, vehicles, animals, and more

- Calculate dwell and loiter times for people, and measure how objects move

- Build heatmaps and spaghetti diagrams

- Enhance monitoring and analysis with attributes

- Handle partial and full occlusion
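Dwell and loiter times can be derived from a tracked object's timestamped positions. The sketch below assumes a hypothetical track format (a list of `(timestamp_seconds, x, y)` samples for one object) and a rectangular zone. It adds up the time between consecutive samples when both samples fall inside the zone, then flags loitering when that total exceeds a threshold. This is a simplified, generic approach, not how Kibsi computes these metrics. Intervals that cross the zone boundary are ignored rather than split.

```python
# Hypothetical track format: (timestamp_seconds, x, y) samples for one tracked object.
Point = tuple[float, float, float]
Zone = tuple[float, float, float, float]  # (x1, y1, x2, y2) rectangle


def in_zone(x: float, y: float, zone: Zone) -> bool:
    zx1, zy1, zx2, zy2 = zone
    return zx1 <= x <= zx2 and zy1 <= y <= zy2


def dwell_seconds(track: list[Point], zone: Zone) -> float:
    """Sum the time between consecutive samples when both lie inside the zone."""
    total = 0.0
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        if in_zone(x0, y0, zone) and in_zone(x1, y1, zone):
            total += t1 - t0
    return total


def is_loitering(track: list[Point], zone: Zone, threshold_s: float) -> bool:
    """Flag a track whose total dwell in the zone exceeds threshold_s seconds."""
    return dwell_seconds(track, zone) > threshold_s
```

For a person sampled once per second who spends three samples in the zone, steps out, and returns, only the two fully-inside intervals count toward dwell.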


Understand any interaction

- Determine when people are holding, touching, or near an object

- Categorize the nature of interactions between multiple people

- Measure the size of groups, and the arrival and departure of people from a group

- Determine direction and speed of travel
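Direction and speed of travel fall out of a track's positions over time. The sketch below computes average speed and heading between the first and last samples of a hypothetical `(timestamp_seconds, x, y)` track. It assumes a single `pixels_per_meter` scale for the whole scene, which only holds for a flat, roughly overhead view. A real system would more likely map image points onto the ground plane first. The function and its parameters are illustrative, not part of Kibsi.

```python
from math import atan2, degrees, hypot


def speed_and_heading(track: list[tuple[float, float, float]],
                      pixels_per_meter: float) -> tuple[float, float]:
    """Average speed (m/s) and heading (degrees) from first to last sample.

    Heading is measured in image coordinates, where y grows downward:
    0 = moving right, 90 = moving down the image, 180 = moving left.
    """
    (t0, x0, y0), (t1, x1, y1) = track[0], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("track must span a positive time interval")
    # Convert pixel displacement to meters using the assumed uniform scale.
    dx = (x1 - x0) / pixels_per_meter
    dy = (y1 - y0) / pixels_per_meter
    speed = hypot(dx, dy) / dt
    heading = degrees(atan2(dy, dx)) % 360
    return speed, heading
```

Using only the endpoints keeps the example short. Averaging over a sliding window of samples would give a steadier estimate for noisy tracks.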

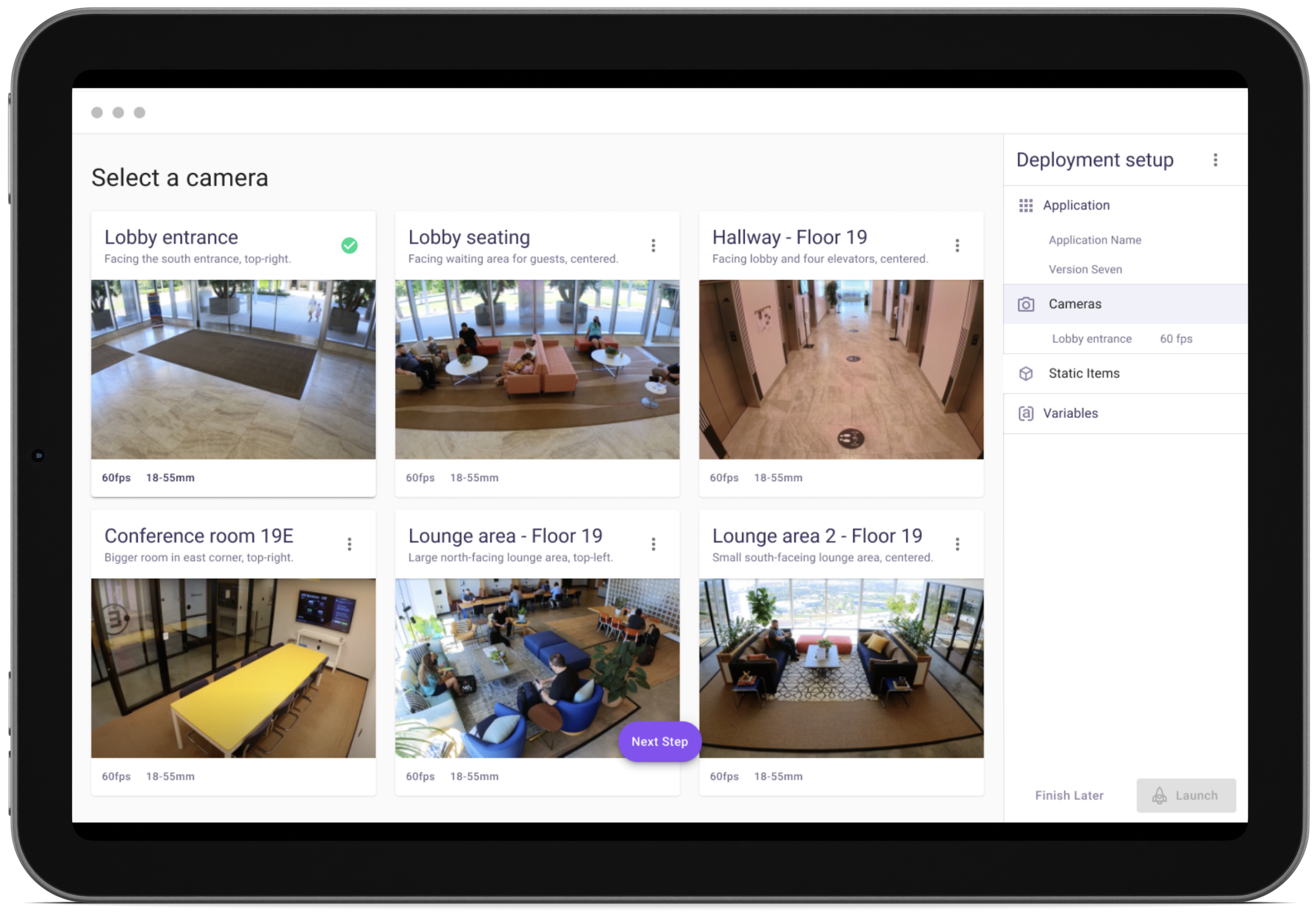

Any camera...anywhere

Kibsi works with your existing camera installations so you can quickly and easily turn your video feeds into actionable insights. Plus, with multi-camera support you’ll be able to see your business from every angle.

Built for Privacy

Kibsi does not detect the identity or identifying features of people, simplifying compliance with privacy laws and policies. Additionally, video data is not retained by Kibsi unless explicitly enabled in response to a detected event.

Kibsi also supports workflows for hybrid deployments, so your video never has to leave your premises.


How Does Kibsi Work in Your World?

Kibsi is designed to flex to your needs, offering real-time alerts, easy customization, and seamless API integration. Whether it’s tracking freight, monitoring manufacturing or keeping queues moving for concessions, Kibsi turns your video feeds into actionable insights. Intrigued? There’s a lot more to explore.

A cutting-edge computer vision platform

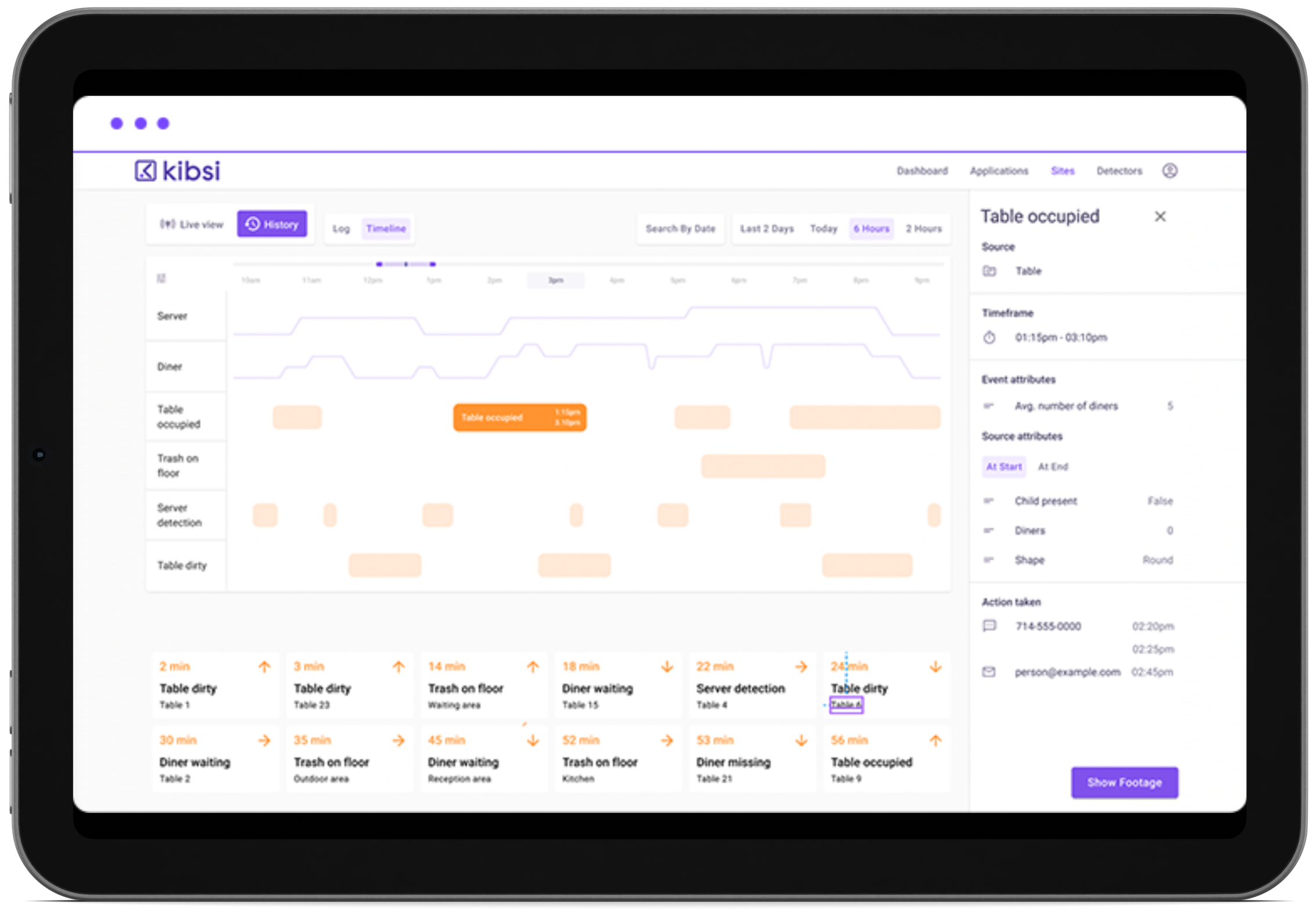

Measure everything

Level up your analyses with new data and insights that have never been accessible before. Use time-series data from Kibsi to inform business decisions, optimize workflows, and delight customers.

A platform built for all users

A rich canvas to map detections into your business language. Our drag-and-drop interface allows anyone to build computer vision applications.

Point and click deployment

Computer vision outcomes require a lot of components, assembled in just the right way. Kibsi has you covered from development through production, with just a few clicks – no fragile processes to build & maintain.

Building blocks for any use case

Built-in computer vision models for thousands of objects and classes, curated to provide state-of-the-art detections. And of course, you can bring your own custom detectors built on common architectures.

Detections + context for valuable insights

Compose multiple detectors with simple logic to gain additional insights and add context, creating limitless combinations.

An API for the physical world

Treat the world as if it were a relational datastore. Understand the lifecycle, long-term state, and interactions of detected objects. It’s like having an API that returns the state of the physical world.
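The "relational datastore" analogy can be illustrated with plain SQL. The sketch below loads a few hypothetical per-frame observations into an in-memory SQLite table and asks for each tracked object's lifecycle: when it was first seen, when it was last seen, and how long it stayed visible. The table schema and data are invented for illustration. They do not describe how Kibsi stores or exposes data.

```python
import sqlite3

# In-memory table of hypothetical per-frame observations (illustrative schema only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE observations (track_id INTEGER, label TEXT, ts REAL)")
conn.executemany(
    "INSERT INTO observations VALUES (?, ?, ?)",
    [
        (1, "person", 0.0), (1, "person", 1.0), (1, "person", 4.0),
        (2, "vehicle", 2.0), (2, "vehicle", 3.5),
    ],
)

# Lifecycle of each tracked object: first seen, last seen, and time visible.
rows = conn.execute(
    """
    SELECT track_id, label,
           MIN(ts) AS first_seen,
           MAX(ts) AS last_seen,
           MAX(ts) - MIN(ts) AS visible_seconds
    FROM observations
    GROUP BY track_id, label
    ORDER BY track_id
    """
).fetchall()
```

Here `rows` holds one tuple per track, such as `(1, 'person', 0.0, 4.0, 4.0)` for the person who was visible for four seconds.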